Migrating your workloads to the cloud is the start of a journey with numerous benefits, including scalability and flexibility that are hard to match on-premises. Cloud computing is an invaluable tool for businesses of all sizes. Moving your workloads to the cloud allows you to take advantage of these benefits and to analyse costs more thoroughly than is possible with on-premises resources.

DNX, powered by AWS, has helped many customers unlock their full business potential and achieve their goals. Modernising your applications, infrastructure, and data leads to short, medium and long-term benefits from both technical and business perspectives. Today, we will share the lessons we have learned while modernising several large monolithic applications so that you can benefit from our rights and wrongs.

Context

.NET has changed and evolved so much since the creation of the cross-platform .NET Core (now just called .NET). Over the years, Microsoft and the .NET team have done, and are still doing, a fantastic job. While it can be great to be a .NET developer nowadays, unfortunately not all .NET developers are lucky. Many companies and developers are still stuck with older and unsupported framework versions.

Moving away from the .NET Framework and Windows Operating System can have advantages like reducing costs and improving the developer experience. Additionally, porting applications to .NET can give access to new technologies and tools, as well as better integration with other systems or services. This can increase efficiency and productivity and help avoid security vulnerabilities and compliance issues that arise from using old software.

In 2022, DNX completed a Windows Modernisation project for a medium-sized company operating in the tech industry. The project was split into 3 phases: Windows Discovery, Pre-modernisation, and Modernisation.

The main goal of the Windows Modernisation project was to migrate a large codebase consisting of 62 C# projects built using .NET Framework 4.6.1. The code was organised in a single Git repository, including 16 applications and 46 class libraries. The applications included two ASP.NET MVC projects, six ASP.NET API projects, six console applications, and two Windows services.

A team of five Developers, two Quality Assurance (QA) engineers, and one Delivery Lead dedicated eight months to complete the migration of the entire codebase. The migrated applications were large and complex, requiring careful planning and attention to detail to ensure a successful migration.

The team was led by DNX’s Delivery Lead, and was composed of both DNX and customer representatives. This collaborative approach not only saved time but also ensured that everyone was on the same page throughout the project. It also provided an opportunity to upskill the customer’s team and minimise risks during and after the project.

Windows Discovery

DNX is a specialised AWS Windows partner that offers Windows Discovery to help companies quickly and confidently migrate and modernise their existing Windows workloads to the AWS cloud. The program provides a comprehensive set of resources and services to help customers understand the benefits of the cloud and how to best prepare for this journey. Customers receive access to AWS experts, cost estimators, migration tools, and other resources to help them plan, build, and manage their Windows environment on the AWS cloud. There are also opportunities to save costs by migrating from .NET Framework to .NET and using Linux to power applications instead of using the Windows Operating System.

Windows Discovery is designed to help customers understand the value of the cloud and guide them through the migration process. During this phase, the DNX team thoroughly assesses the customer’s current environment to identify workload modernisation opportunities that can benefit both business and technical areas.

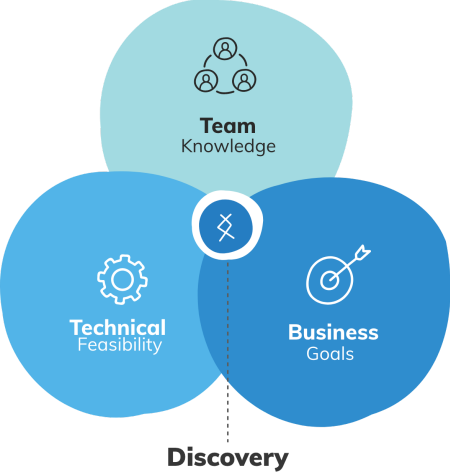

The Windows Discovery program includes a comprehensive set of workshops to create a roadmap for a complete application modernisation strategy on AWS, using modern cloud-native concepts. It connects every aspect of a customer’s business to reduce risks and increase the reliability and control of their processes. Windows Discovery emerges at the end of a series of human-centred workshops that hits the overlap of these three lenses. This all-hands process leads businesses to experience the benefits of migrating from .NET Framework to .NET.

Windows Discovery: An all-hands process

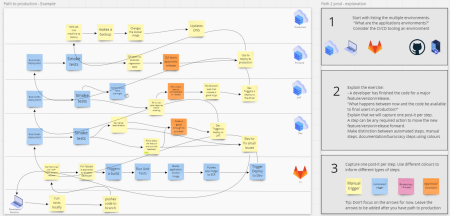

During the Discovery phase, DNX and the customer’s team held workshops to review and enhance their product. These workshops included product demonstrations, code walkthroughs, event storming, and discussions on the Path-to-Production, Is/Is Not – Does/Does Not, and RAID (Risk, Assumptions, Issues, Dependencies). These sessions allowed us to gather different perspectives, evaluate the code quality, review the product’s deployment and release process, and identify potential risks, assumptions, issues, and dependencies that may be encountered during the modernisation process.

Example of a Path-to-Production workshop

These workshops were essential for understanding the key elements to be addressed during the modernisation phase. To achieve the best alignment of expectations, we focused on having a mix of technical and business stakeholders in each workshop. This enabled us to create a customised modernisation roadmap based on the customer’s needs.

As part of this phase, we conducted a comprehensive analysis of the client’s codebase, evaluating factors such as dependency injection, NuGet package dependencies, class complexity, cyclic dependencies, and any other potential issues that could complicate the migration. Additionally, we conducted quick proof-of-concept tests to measure the typical framework modernisation in the client’s codebase, providing more accurate estimates for project duration and effort.

At the end of this phase, we delivered a detailed project roadmap ready for implementation, project deliverables over time, and how to avoid risks and costly mistakes. We also provided a customised execution plan so the customer could understand the most efficient way to implement their project with strategic and detailed outline tasks, resource metrics and runtime.

Pre-modernisation

The pre-modernisation phase was designed to make the Windows Modernisation phase smoother and faster. During this phase, the application codebase and development process were updated according to the roadmap proposed in the previous phase, which incorporated Microsoft’s recommendations for migrating applications to .NET.

With all external and internal dependencies identified, we began making everything, as much as possible, compatible with .NET 6. The first step was migrating from NuGet packages.config file to PackageReference settings in the project file (Visual Studio can assist you with this task). Then, we updated all NuGet packages to the most recent version available, and for cases where the package was deprecated or unsupported by .NET 6, we replaced it with an alternative.

In the second step, we retargeted all the projects to the latest version of the .NET Framework to ensure the availability of the latest API alternatives. We also upgraded them to the latest project file format, known as SDK-style projects. This new project file format was created for .NET Core and beyond but can be used with .NET Framework. Having your project file in the latest format gives you a good base for porting your app in the future.
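As a rough illustration of the two steps above, an SDK-style project file that still targets .NET Framework and declares its NuGet dependencies with PackageReference might look like the following. The package name and version are placeholders chosen for illustration; they are not taken from the customer's codebase.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <!-- Still .NET Framework, but in the new project file format -->
    <TargetFramework>net48</TargetFramework>
  </PropertyGroup>

  <ItemGroup>
    <!-- Replaces the corresponding entry in packages.config (placeholder package) -->
    <PackageReference Include="Newtonsoft.Json" Version="13.0.1" />
  </ItemGroup>

</Project>
```

Note that source files are not listed: SDK-style projects pick up the .cs files under the project directory automatically.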

An important step before upgrading your .NET projects to the SDK-style format is to delete all hidden or excluded C# files in your Solution directory (this PowerShell script might help you). SDK-style projects compile every .cs file found under the project directory by default, so any leftover files will be re-added to the build, often with many build errors.
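The script referred to above is not reproduced here. As a hedged alternative, the following C# sketch lists .cs files that exist on disk but are not referenced by any Compile item in a legacy project file, so they can be reviewed before deletion. The class and method names are hypothetical, and the sketch assumes explicit Compile Include entries: it does not handle wildcard includes, linked files, or other projects nested in subdirectories.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: finds .cs files on disk that a legacy (non-SDK-style)
// project file does not reference through <Compile Include="..." />.
public static class OrphanedSourceFinder
{
    public static List<string> FindOrphans(string csprojPath)
    {
        string projectDir = Path.GetDirectoryName(Path.GetFullPath(csprojPath)) ?? ".";

        // Collect every explicitly compiled file; legacy projects use backslashes.
        var included = new HashSet<string>(
            XDocument.Load(csprojPath)
                .Descendants()
                .Where(e => e.Name.LocalName == "Compile")
                .Select(e => (string)e.Attribute("Include"))
                .Where(p => !string.IsNullOrEmpty(p))
                .Select(p => Path.GetFullPath(
                    Path.Combine(projectDir, p.Replace('\\', Path.DirectorySeparatorChar)))),
            StringComparer.OrdinalIgnoreCase);

        // Anything on disk that is neither compiled nor build output is an orphan.
        return Directory.EnumerateFiles(projectDir, "*.cs", SearchOption.AllDirectories)
            .Where(f => !IsUnderBuildOutput(projectDir, f) && !included.Contains(f))
            .OrderBy(f => f, StringComparer.OrdinalIgnoreCase)
            .ToList();
    }

    // Skips generated files under bin/ and obj/.
    private static bool IsUnderBuildOutput(string projectDir, string file)
    {
        string[] segments = file.Substring(projectDir.Length)
            .Split(Path.DirectorySeparatorChar, Path.AltDirectorySeparatorChar);
        return segments.Any(s => s.Equals("bin", StringComparison.OrdinalIgnoreCase)
                              || s.Equals("obj", StringComparison.OrdinalIgnoreCase));
    }
}
```

Calling `OrphanedSourceFinder.FindOrphans("path/to/App.csproj")` returns the candidate files; printing them for review first is safer than deleting them outright.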

The pre-modernisation phase was vital in migrating the codebase to .NET 6. We gained a comprehensive understanding of the codebase, which later helped us avoid costly mistakes or delays caused by incompatible code or architecture. Careful planning and preparation during this phase saved us time and effort in the long run. Additionally, this phase was essential in providing training and upskilling opportunities for the customer’s development team.

Windows Modernisation

In the Windows Modernisation phase, we applied the knowledge and understanding we had gained in the previous stages to update the application’s architecture and codebase to comply with .NET 6 and its new features. We tested and debugged the application to ensure it functioned as expected. Additionally, we focused on improving the application’s performance and security through code refactoring and implementing security protocols. The ultimate goal of this phase was to bring the application up to modern standards, ready for automated deployments on Linux.

From the start of the project, we considered the company’s Business as Usual (BAU) operations. As a result, we gathered insights on strategies for branching, releasing new features and bug fixes, and lead time for changes to be deployed to production through workshops such as Path-to-Production. Based on this information, we developed a solution incorporating a straightforward sprint management process, ticket creation, pull request submission, reviews, and branching strategies. As the project progressed, we refined this process, particularly when addressing essential business needs and introducing new features to minimise merge conflicts while aligning with BAU operations.

We also employed various techniques to improve parallelisation and speed up the porting process for all libraries and applications. We will discuss these techniques in the following sections.

Use tools to assist when porting your applications

You can simplify migrating an application from .NET Framework to .NET by using tools that automate certain aspects of the migration. However, it’s important to remember that porting a complex project is still a complex process, even with the assistance of these tools.

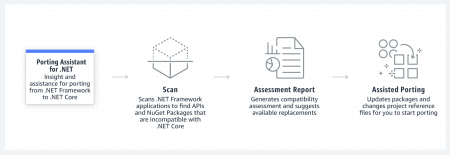

AWS Porting Assistant for .NET is a helpful tool for analysing and migrating .NET Framework applications to .NET Core on Linux. It scans your application and generates a compatibility assessment, providing valuable insights and guidance for porting it from .NET Framework to .NET Core. This helps streamline the migration process and achieve a faster and more efficient port.

How AWS Porting Assistant for .NET works – Image from AWS

.NET Upgrade Assistant is a command-line tool designed to help upgrade .NET Framework applications to .NET. It can be used for various types of .NET Framework apps, including Windows Forms, WPF, ASP.NET MVC, console, and class libraries. After running the tool, additional effort may be needed to complete the migration. To help with this, the tool installs analysers and integrates them with other tools.

The try-convert tool is a .NET global tool that can convert projects or entire solutions to the .NET SDK, including migrating desktop applications to .NET. However, it is not recommended for projects with complex build processes involving custom tasks, targets, or imports. For more information, refer to the try-convert repository on GitHub.

.NET Portability Analyzer is a tool that analyses assemblies and generates a detailed portability report. This report indicates missing .NET APIs in applications or libraries that need to be ported to the specified targeted .NET platforms.

The Platform compatibility analyzer analyses whether your application uses any APIs that will throw a PlatformNotSupportedException at runtime. It is unlikely to detect these APIs if you are migrating from .NET Framework 4.7.2 or higher, but it is still advisable to perform this check.

There is also a new and still experimental tool called Microsoft Project Migrations which can be helpful when porting ASP.NET projects. Its goal is to help with incremental migration from ASP.NET Framework to ASP.NET Core. Microsoft Project Migrations uses the Strangler Fig Pattern to guide migrating from an old, legacy system to a newer, updated one. You can find out more about this and other delivery patterns here.

In conclusion, several tools are available to help migrate .NET Framework applications to .NET. AWS Porting Assistant for .NET stands out as a handy tool as it provides valuable insights and guidance for the porting process, helping to streamline the migration and achieve a faster, more efficient port. Other tools are available for specific needs, such as converting projects or entire solutions to .NET, generating portability reports, or checking for APIs that may throw exceptions at runtime. It is essential to review all of these options and determine the best fit for your needs.

Be aware of unavailable technologies

There are a few technologies in the .NET Framework that are not available in .NET, as pointed out by Microsoft in their article on porting from the .NET Framework to .NET. These technologies include:

- Application domains: Creating additional application domains is not supported. Instead, use separate processes or containers for code isolation.

- Remoting: Remoting is no longer supported; instead, use System.IO.Pipes or MemoryMappedFile for simple IPC, or frameworks like StreamJsonRpc, ASP.NET Core with gRPC, or RESTful Web API services for more complex scenarios.

- Code access security (CAS): CAS was a sandboxing technique in .NET Framework, which was deprecated in 4.0. It is no longer supported; use security boundaries provided by the OS such as virtualisation, containers, or user accounts.

- Security transparency: No longer recommended for .NET Framework apps; instead, use OS security boundaries such as virtualisation, containers, or user accounts.

- System.EnterpriseServices: System.EnterpriseServices (COM+) is not supported in .NET.

- Windows Workflow Foundation (WF): WF is not supported in .NET. An alternative is CoreWF.

For more information about these unsupported technologies, see .NET Framework technologies unavailable on .NET Core and .NET 5+.

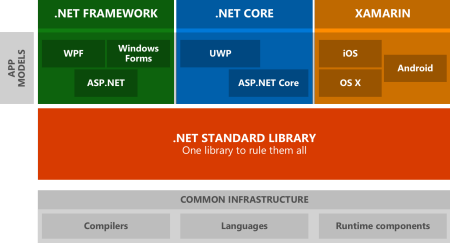

Multi-targeting: a way to support multiple .NET versions

Microsoft recommends using a .NET Standard class library when creating a new project to ensure compatibility with various .NET versions. By using .NET Standard, your .NET project automatically gains cross-platform support. Unfortunately, this is not always possible, and you may need to include code that targets a particular framework. In these cases, a multi-targeting approach for SDK-style projects can be helpful.

Multi-targeting is a way of supporting multiple .NET versions within a single application or library. It allows developers to build code that can run on various platforms without maintaining multiple codebases. It is a common technique among library authors, and it lets you use conditional compilation in your code to call framework-specific APIs.

For more information about how .NET compares to .NET Standard, see .NET 5+ and .NET Standard.

From Microsoft docs.

We began by converting the most widely referenced class library projects targeting .NET Framework to multi-targeting, starting with those that had the fewest project references of their own. The idea was that changes to these projects would have the most far-reaching effect and would therefore be the most beneficial. In addition, this approach enabled us to update each app incrementally without breaking the others.

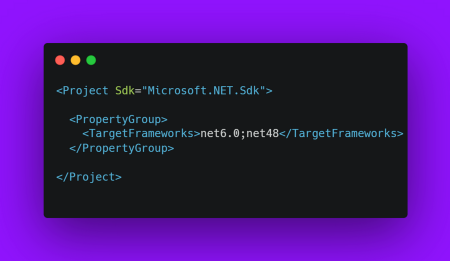

It’s pretty simple to add multi-target support to your project. Assuming that your project already uses the SDK-style format, you only need to edit the .csproj file to list the target frameworks. For example, change <TargetFramework>net48</TargetFramework> to <TargetFrameworks>net6.0;net48</TargetFrameworks>.

Make sure you change the XML element from singular to plural (add the “s” to both the open and close tags).
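In practice, a multi-targeted library often also needs different references per framework. The fragment below is a minimal sketch of how that could look, assuming a library that relies on System.Web under .NET Framework and on the ASP.NET Core shared framework under .NET 6; the specific references are illustrative assumptions rather than the project's actual dependencies.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <TargetFrameworks>net6.0;net48</TargetFrameworks>
  </PropertyGroup>

  <!-- Only referenced when building the .NET Framework flavour -->
  <ItemGroup Condition="'$(TargetFramework)' == 'net48'">
    <Reference Include="System.Web" />
  </ItemGroup>

  <!-- Only referenced when building for .NET 6 -->
  <ItemGroup Condition="'$(TargetFramework)' == 'net6.0'">
    <FrameworkReference Include="Microsoft.AspNetCore.App" />
  </ItemGroup>

</Project>
```

Each build then produces one assembly per target framework, so projects that have not been ported yet can keep consuming the net48 output.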

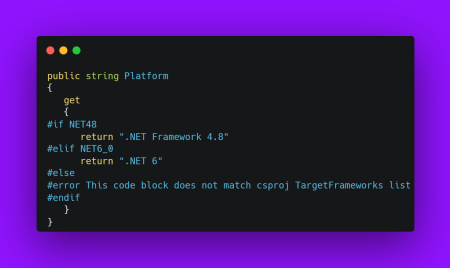

Now, if you have any .NET Framework-specific code that doesn’t work with .NET 6, you can use conditional directives #if NET48 or #if NET6_0 to separate each version of the target framework-dependent code.
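As a small sketch of such a directive, the helper below uses an API that only exists on newer runtimes when it is available and falls back to the older equivalent otherwise. The class and method names are hypothetical. It uses the predefined NET6_0_OR_GREATER symbol rather than NET6_0 so that the newer branch keeps applying if the project later moves past .NET 6.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper compiled for both net48 and net6.0.
public static class HashHelper
{
    // Returns the SHA-256 hash of the UTF-8 encoded text as uppercase hex.
    public static string Sha256Hex(string text)
    {
        byte[] data = Encoding.UTF8.GetBytes(text);

#if NET6_0_OR_GREATER
        // Static one-shot APIs introduced in .NET 5.
        return Convert.ToHexString(SHA256.HashData(data));
#else
        // .NET Framework path: same result using the older instance API.
        using (SHA256 sha = SHA256.Create())
        {
            return BitConverter.ToString(sha.ComputeHash(data)).Replace("-", "");
        }
#endif
    }
}
```

Callers simply use `HashHelper.Sha256Hex(...)` and never see the directive, which keeps the framework-specific code confined to one place.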

By utilising multi-targeting effectively, our team was able to save a significant amount of time during the extensive modernisation project. It enabled us to divide the work into smaller and incremental tasks. As a result, we got more people involved and made progress more efficiently. This strategy proved to be highly effective in helping us to achieve our goals.

How to use C# using alias in your favour

The C# using alias directive is a handy technique to reduce the amount of code you write. It allows you to assign a short name (an alias) to a longer type or namespace name and use the alias in its place. Even the C# language itself makes use of aliases: string is an alias for a class called System.String, int is an alias for a struct called System.Int32, and so on.

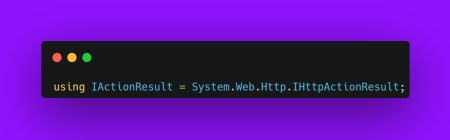

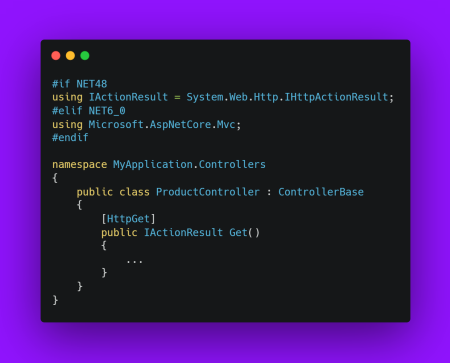

This technique can also be beneficial when multi-targeting, because it avoids scattering conditional compilation directives, and the duplicated code they separate, across the codebase. For instance, you can use a using alias directive to map types from .NET Framework that were renamed or not ported to .NET onto their replacements.

The interface IHttpActionResult does not exist in ASP.NET Core; its counterpart is IActionResult. Therefore, instead of creating a conditional compilation directive for each IHttpActionResult reference inside our controller class, we can add a using alias once. Then, all the code below it will work for either version of the framework.

Within your app, the alias itself can be wrapped in a preprocessor directive so that it only applies when compiling for the matching target framework.
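A minimal sketch of this idea is shown below, assuming a project that multi-targets net48 (with ASP.NET Web API 2) and net6.0 (with ASP.NET Core). The controller name and its action are hypothetical, and routing configuration is omitted. The second alias, which maps ApiController onto ControllerBase, is an extra assumption added so that the class declaration also compiles unchanged.

```csharp
// Assumes a project multi-targeting net48 (ASP.NET Web API 2) and net6.0 (ASP.NET Core).
#if NET6_0_OR_GREATER
using Microsoft.AspNetCore.Mvc;
// Map the legacy names onto their ASP.NET Core counterparts, once per file.
using ApiController = Microsoft.AspNetCore.Mvc.ControllerBase;
using IHttpActionResult = Microsoft.AspNetCore.Mvc.IActionResult;
#else
using System.Web.Http;
#endif

// Hypothetical controller: the body is identical for both target frameworks.
public class WidgetsController : ApiController
{
    [HttpGet]
    public IHttpActionResult Get(int id)
    {
        if (id <= 0)
        {
            return BadRequest("id must be positive");
        }

        return Ok(new { Id = id, Name = "sample" });
    }
}
```

Once the .NET Framework target is retired, the directive and aliases can be removed and the legacy names replaced with a single find-and-replace.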

Using the alias directive in the header of your C# class and replacing all references inside the class with the new alias can make it easier to maintain both versions and eventually decommission or delete the old version once the code is fully migrated to .NET. See more about this topic in Microsoft docs.

Be aware of significant breaking changes

The migration from .NET Framework to .NET 6 brings a significant number of breaking changes, including the introduction of new features and changes in syntax. In the following sections, we will delve into the specific issues we encountered during our migration process and discuss the importance of carefully considering these changes. We will also guide you on effectively addressing and avoiding potential breaking changes during your migration.

Nullable reference types

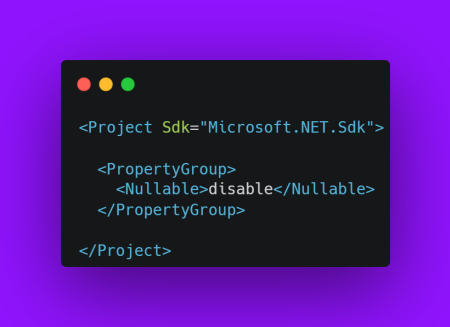

Nullable reference types are a feature introduced in C# 8.0 that lets you declare whether a reference type variable is allowed to be null. We do not recommend enabling nullable reference types immediately after porting, as doing so can produce a very large number of warnings and change runtime behaviour in areas such as model validation. Leaving the feature disabled at first keeps the behaviour as close as possible to that of .NET Framework.
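The feature is controlled per project through the Nullable property in the project file. The fragment below is a sketch of keeping it switched off explicitly while the port is in progress; the target framework shown is only an example.

```xml
<PropertyGroup>
  <TargetFramework>net6.0</TargetFramework>
  <!-- Keep nullable reference types off for now; switch to "enable" once the code is ready -->
  <Nullable>disable</Nullable>
</PropertyGroup>
```

Individual files can still opt in early by placing `#nullable enable` at the top of the file, which makes a gradual rollout possible.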

Nullable reference types can and will also impact your model binding and validation.

- If you have a property on a model class that is a nullable reference type and don’t provide a value for it when binding the model, the model binder will set the property to null.

- If you have a property on a model class that is a non-nullable reference type and don’t provide a value for it when binding the model, ASP.NET Core treats the property as if it were marked [Required]: model validation fails and, in controllers marked with [ApiController], the request is rejected with a 400 Bad Request response.

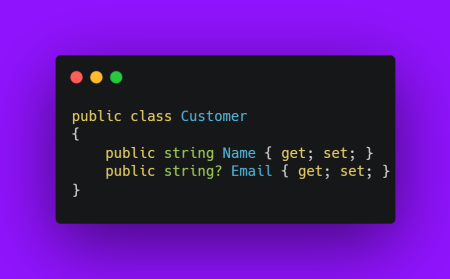

For example, consider the following model class:
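The original listing is not available here, so the class below is a hypothetical stand-in with the two properties the discussion relies on: a non-nullable Name and a nullable Email. The class name is an assumption.

```csharp
#nullable enable

// Hypothetical model used to illustrate nullable and non-nullable properties.
public class UserModel
{
    // Non-nullable reference type: expected to be present in the posted data.
    public string Name { get; set; } = string.Empty;

    // Nullable reference type: stays null when the posted data has no Email value.
    public string? Email { get; set; }
}
```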

With a nullable Email property, Email will be set to null if you post data with only ‘Name’ provided. In contrast, if Email were a non-nullable reference type, model validation would fail whenever the property was missing from the posted data.

It’s important to note that nullable reference types can be helpful when model binding is done automatically, because they help you identify potential null reference exceptions. However, suppose you’re working with a model from a different source, such as a database, where the same nullability constraints are not guaranteed. In that case, you might want to consider the [AllowNull] attribute, declaring the affected properties as nullable, or relaxing the implicit check globally through the MvcOptions.SuppressImplicitRequiredAttributeForNonNullableReferenceTypes setting.

In summary, testing before enabling nullable reference types for the project is essential. Then, if possible, enable it little by little until you are sure that it will not cause you any problems.

Model binding

Model binding in .NET has been significantly redesigned and reimplemented, resulting in several breaking changes compared to previous versions of the .NET Framework. In .NET Framework, model binding is implemented using the System.Web.Mvc namespace, while in .NET, it is implemented using the Microsoft.AspNetCore.Mvc namespace.

The main difference between the two is that the model binding in .NET is more flexible and powerful than in the .NET Framework. For example, in .NET, you can use attributes, such as [FromBody] and [FromQuery], to specify the data source for the model. In contrast, in .NET Framework, the data source is determined by the type of action method parameter. Additionally, the model binding in .NET is more extensible, allowing for custom model binding and validation, while in .NET Framework, this functionality is more limited.

There are several known breaking changes between .NET Framework and .NET Core related to model binding:

- The System.Web.Mvc namespace is not available in .NET, so any code that references this namespace will need to be updated to use the Microsoft.AspNetCore.Mvc namespace instead.

- In .NET Framework, model binding is performed by the DefaultModelBinder class, which is unavailable in .NET. Instead, model binding is performed by the default implementation of the IModelBinder interface.

- In .NET Framework, model binding is performed based on the type of action method parameter, while in .NET, you can use attributes, such as [FromBody] and [FromQuery], to specify the source of the data for the model.
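To make the last point concrete, here is a minimal sketch of an ASP.NET Core action that states explicitly where each parameter comes from. The controller, route, and request type are hypothetical names chosen for illustration.

```csharp
using Microsoft.AspNetCore.Mvc;

// Hypothetical request payload, bound from the JSON body.
public class CreateOrderRequest
{
    public string Sku { get; set; } = string.Empty;
    public int Quantity { get; set; }
}

[ApiController]
[Route("api/orders")]
public class OrdersController : ControllerBase
{
    // POST api/orders?dryRun=true with a JSON body such as {"sku":"ABC","quantity":2}
    [HttpPost]
    public IActionResult Create(
        [FromBody] CreateOrderRequest request,   // read from the request body
        [FromQuery] bool dryRun = false)         // read from the query string
    {
        if (request.Quantity <= 0)
        {
            return BadRequest("Quantity must be positive");
        }

        return Ok(new { request.Sku, request.Quantity, DryRun = dryRun });
    }
}
```

Making the binding source explicit is useful during a port, because it documents the assumption each action makes about incoming requests and exposes mismatches early in testing.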

It’s important to note that in order to make a smooth transition from .NET Framework to .NET, developers should thoroughly test their applications and make the necessary updates to ensure compatibility with .NET Core.

Re-reading ASP.NET Core request bodies

Sometimes it is necessary to re-read the request body multiple times. For example, you might need to read the request body once to perform validation and then reread it to process the request. In the ASP.NET framework, it was possible to read the body of an HTTP request multiple times using the HttpRequest.GetBufferedInputStream method. However, in ASP.NET Core, a different approach must be used as, by default, the request body is only readable once in .NET.

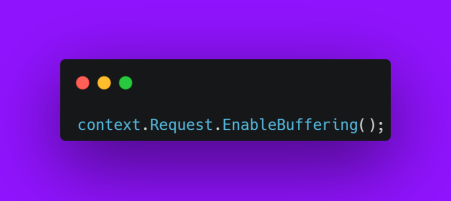

To allow the request body to be read multiple times, you need to enable buffering for the request. This can be done using the EnableBuffering() method, which is available on the HttpRequest object.

For example, you might use code like this to enable buffering for a request:
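The original listing is not available here, so the following is a sketch of one way to do it rather than the exact code used in the project. The helper class and method names are hypothetical; EnableBuffering() is the ASP.NET Core extension method on HttpRequest. The stream is rewound before and after reading so that later readers, such as model binding, see the body from the start.

```csharp
using System.IO;
using System.Text;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;

// Hypothetical helper for reading a request body without consuming it.
public static class RequestBodyReader
{
    public static async Task<string> ReadBodyAsync(HttpRequest request)
    {
        // Makes the body stream seekable if it is not already.
        request.EnableBuffering();
        request.Body.Position = 0;

        // leaveOpen: true so disposing the reader does not close the request stream.
        using var reader = new StreamReader(
            request.Body,
            Encoding.UTF8,
            detectEncodingFromByteOrderMarks: false,
            bufferSize: 1024,
            leaveOpen: true);

        string body = await reader.ReadToEndAsync();

        // Rewind so the next reader starts from the beginning.
        request.Body.Position = 0;
        return body;
    }
}
```

A helper like this would typically be called from middleware or a filter that runs before the action, for example to validate or log the payload.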

With buffering enabled, you can read the request body multiple times, provided you rewind the stream between reads (for example, by setting Body.Position back to 0). Just be aware that enabling buffering can impact performance, as the request body must be held in memory or, for larger bodies, spilled to a temporary file on disk. Therefore, you should only enable buffering in cases where you need to read the request body multiple times.

Automate repeatable tasks

Once most of the architecting and configuration work is complete, the remaining tasks can often be automated. Automating repetitive tasks during migration is a crucial step in streamlining the entire process and ensuring that it is consistent, reliable, and efficient. In addition, by using automation, businesses can be confident that the migration will be completed on time and with minimal errors.

We suggest you follow these steps when automating:

- Define the goal of automation.

- Identify the tasks that can be automated.

- Create a plan for automation.

- Implement the automation.

- Monitor and adjust the automation as needed.

Roslyn is the .NET compiler platform that enables developers to automate repeatable tasks with the help of its APIs. Developers can use Roslyn to create custom code analysis tools, build code refactoring tools, and develop custom code generators for their projects. It also allows them to use the C# and Visual Basic compilers as services to build their own languages, host the compilers in their own applications, and build code analysis tools to detect potential issues with the code. Roslyn enables developers to automate tasks such as finding code smells, refactoring code, and generating code snippets.
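As a small, hedged illustration of this kind of automation, the sketch below uses the Roslyn Syntax API (the Microsoft.CodeAnalysis.CSharp NuGet package) to rename every occurrence of one identifier in a source file, for example IHttpActionResult to IActionResult. The class name is hypothetical, and the rewrite is purely syntactic: it renames any identifier with a matching name, so a real tool would normally consult the semantic model before changing anything.

```csharp
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;

// Hypothetical rewriter: replaces one identifier name with another.
public sealed class IdentifierRenameRewriter : CSharpSyntaxRewriter
{
    private readonly string _oldName;
    private readonly string _newName;

    public IdentifierRenameRewriter(string oldName, string newName)
    {
        _oldName = oldName;
        _newName = newName;
    }

    public override SyntaxNode VisitIdentifierName(IdentifierNameSyntax node)
    {
        if (node.Identifier.ValueText != _oldName)
        {
            return node;
        }

        // Keep the original whitespace and comments around the identifier.
        SyntaxToken renamed = SyntaxFactory.Identifier(
            node.Identifier.LeadingTrivia, _newName, node.Identifier.TrailingTrivia);
        return node.WithIdentifier(renamed);
    }

    // Parses the source, applies the rename, and returns the rewritten text.
    public static string Rewrite(string source, string oldName, string newName)
    {
        SyntaxNode root = CSharpSyntaxTree.ParseText(source).GetRoot();
        return new IdentifierRenameRewriter(oldName, newName).Visit(root).ToFullString();
    }
}
```

Running such a rewriter over every file in a solution turns a repetitive manual edit into a repeatable script that can be reviewed once and re-run as branches are merged.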

Lizzy Gallagher, a Senior Software Development Engineer at Mastercard, wrote a blog post about refactoring C# code with the Roslyn Syntax API. She explained how she was able to automate the migration of 200 applications to .NET Core. You can find her blog post here.

Encourage brainstorming sessions

Brainstorming sessions are a great way to generate creative solutions and new ideas. Still, it’s important to create a supportive environment where all participants feel comfortable sharing their thoughts without fear of judgement. To have productive brainstorming sessions, set an agenda or goal, actively encourage participation from all members, and allow enough time for reflection and idea development.

At the beginning of the session, reviewing any relevant background information that participants may need to understand the problem or project at hand can be helpful. It is also important to establish ground rules to ensure that all participants are respectful and listen to each other’s ideas.

Furthermore, allowing participants to take breaks and opportunities to offer feedback is essential. Finally, after the session, it can be beneficial to review the ideas generated and create an action plan to follow up on them.

Brainstorming sessions help the team unblock issues quickly to develop better ways to move faster. They are a great way to generate new ideas and identify potential solutions to complex problems.

Testing

Testing is crucial when migrating an application from .NET Framework to .NET 6. This is because the migration process often involves making significant changes to the codebase, which can introduce new bugs or cause existing features to break.

One of the key benefits of testing is that it helps to ensure that the application is stable and reliable after the migration. This is particularly important if the application is mission-critical or used by many users.

Several types of testing can be used during the migration process, including unit testing, integration testing, and acceptance testing. Each of these tests serves a different purpose and can help identify different issues.

Unit testing is a type of testing that focuses on individual pieces of code or units to ensure that they are working correctly. It can help to identify issues with specific code functions or classes and can be used to validate that changes made during the migration process have not introduced any new bugs.

Integration testing involves testing the interaction between different application components to ensure they work together correctly. This can be particularly useful when migrating an application, as it can help to identify issues that only occur when different parts of the codebase are used together.

Acceptance testing involves testing the application from the perspective of the end user. This can help ensure that the application is functioning as expected and that the changes made during the migration process have not negatively impacted the user experience.

Running tests using multiple target frameworks

Another effective technique we employed in addition to unit and integration testing was multi-targeting. This approach involves specifying multiple target frameworks for our .NET testing libraries.

By using multi-targeting, we were able to run tests in parallel across different framework versions. This proved particularly useful when migrating older applications. It allowed us to quickly and easily compare the functionality of the newly migrated app against the original version. This way, we could ensure that all features were working as expected. In addition, this helped us to ensure that our libraries were cross-platform compatible and that there were no platform-specific issues.
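A multi-targeted test project could be set up along the following lines. This is a sketch that assumes xUnit as the test framework and a hypothetical library called MyLibrary; the package versions are illustrative.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <!-- The same tests are compiled and run once per target framework -->
    <TargetFrameworks>net48;net6.0</TargetFrameworks>
    <IsPackable>false</IsPackable>
  </PropertyGroup>

  <ItemGroup>
    <PackageReference Include="Microsoft.NET.Test.Sdk" Version="17.4.0" />
    <PackageReference Include="xunit" Version="2.4.2" />
    <PackageReference Include="xunit.runner.visualstudio" Version="2.4.5" />
  </ItemGroup>

  <ItemGroup>
    <!-- Hypothetical multi-targeted library under test -->
    <ProjectReference Include="..\MyLibrary\MyLibrary.csproj" />
  </ItemGroup>

</Project>
```

With this in place, `dotnet test` runs the suite for each target framework, and `dotnet test --framework net6.0` restricts the run to a single one.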

Overall, multi-targeting is a valuable technique for effectively testing your applications and libraries across different framework versions and platforms, as well as ensuring that your code is working as expected and has no compatibility issues.

The Scientist library

The Scientist is an open-source library that allows developers to test code changes, such as refactoring or introducing new features, without affecting live users. Originally developed in Ruby and open-sourced by GitHub in 2014, it has since been adapted to other languages, including PHP, Python, Java, C#, and Go. It has been widely adopted to solve real-world problems and praised for its ability to provide a safe and effective way to test code changes.

When migrating between frameworks, such as moving from .NET Framework to .NET 6, compatibility and architectural changes can often lead to unexpected bugs and errors. With the Scientist library, developers can run their code on both frameworks, compare the results, and ensure that the migration process does not introduce any unintended issues. It also allows running new and old code in parallel, which can be useful as a fail-safe in case of an emergency rollback.
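The sketch below shows the general shape of an experiment with the C# port of the library (the Scientist NuGet package, namespace GitHub), following the usage shown in its README. The calculator class and both implementations are hypothetical. The experiment always returns the result of the control (Use) branch, while the candidate (Try) branch is executed and compared in the background of the call.

```csharp
using System;
using GitHub;

// Hypothetical example: compare a legacy calculation with its ported version.
public static class PriceCalculator
{
    public static decimal Total(decimal net, decimal taxRate)
    {
        return Scientist.Science<decimal>("price-total", experiment =>
        {
            experiment.Use(() => LegacyTotal(net, taxRate)); // control: existing behaviour
            experiment.Try(() => PortedTotal(net, taxRate)); // candidate: ported code
        });
    }

    private static decimal LegacyTotal(decimal net, decimal taxRate)
    {
        return Math.Round(net * (1 + taxRate), 2);
    }

    private static decimal PortedTotal(decimal net, decimal taxRate)
    {
        return Math.Round(net + net * taxRate, 2);
    }
}
```

Callers only ever receive the control result, so mismatches between the two branches can be collected and investigated without affecting users.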

In summary, the Scientist library is essential for developers working on migration projects. It provides a safe and reliable way to test and verify changes, increasing confidence in the migration process and reducing the risk of downtime or data loss.

Do not underestimate the complexity of migration

Migrating to .NET can be complex and daunting and is not a task to be taken lightly. Careful planning and comprehensive knowledge of existing and new frameworks are essential. It is important to understand the differences between the two frameworks, including their respective architectures, programming models, and APIs. The process may involve significant changes to the existing codebase, which can be time-consuming and challenging to manage.

It is essential to understand the implications of the migration process. This includes ensuring that existing applications and services remain compatible with the new framework, and understanding how features, performance, and security differ between the two frameworks and how those differences will affect the systems and services that depend on the migrated code.

With proper preparation, knowledge, and management, the migration process can be completed with minimal disruption and maximum success. If assistance is needed, DNX can guide you through the process.

Final thoughts

After a lengthy and challenging process of migrating several monolithic applications from .NET Framework to .NET 6, we gained valuable experience in managing the complexities of large-scale migrations and the importance of thorough planning. We also learned the necessity of robust testing and debugging to ensure the application functions correctly after the migration. These lessons will be invaluable for future projects.

Migrating to .NET 6 can reduce costs by eliminating the need for Windows licences in favour of Linux. As .NET 6 is open source and cross-platform, applications can run on Linux without Windows operating system licensing fees, resulting in cost savings and increased flexibility, as customers can choose from various Linux distributions. This also decreases IT overhead, as there is no longer a need to manage multiple Windows licences.

DNX Solutions has got you covered when it comes to migration. Our experts will assist you throughout the process and ensure your applications are modernised seamlessly, securely, and with minimal disruption. We’ll help you make informed decisions and navigate the process from start to finish, utilising the full potential of AWS services. Trust us to make your migration process a smooth and stress-free experience.

Additional Links for further reading



Contact a DNX expert to book a free 15-minute consultation and explore your possibilities for Cloud Migration