Why keep your non-production environments up when nobody is up?

An environment in AWS is usually a group of these resources:

- Load balancers

- EC2 instances (hopefully part of an Autoscaling Group)

- RDS instances

- EBS volumes

- S3 buckets

- Caches, ElasticSearch, etc.

In this tutorial, we will show how to turn off the most costly members of an environment: EC2 and RDS instances.

By shutting an environment down outside working hours, you can cut its total monthly cost by around 50%.

EC2s with an Autoscaling Group

For ASGs, we will use the built-in Scheduled Actions feature and create two actions:

- One to start the instances in the morning by setting the number of instances (desired, max, min) to what they were before, and

- One to turn off all the instances at night by setting the ASG numbers to zero.

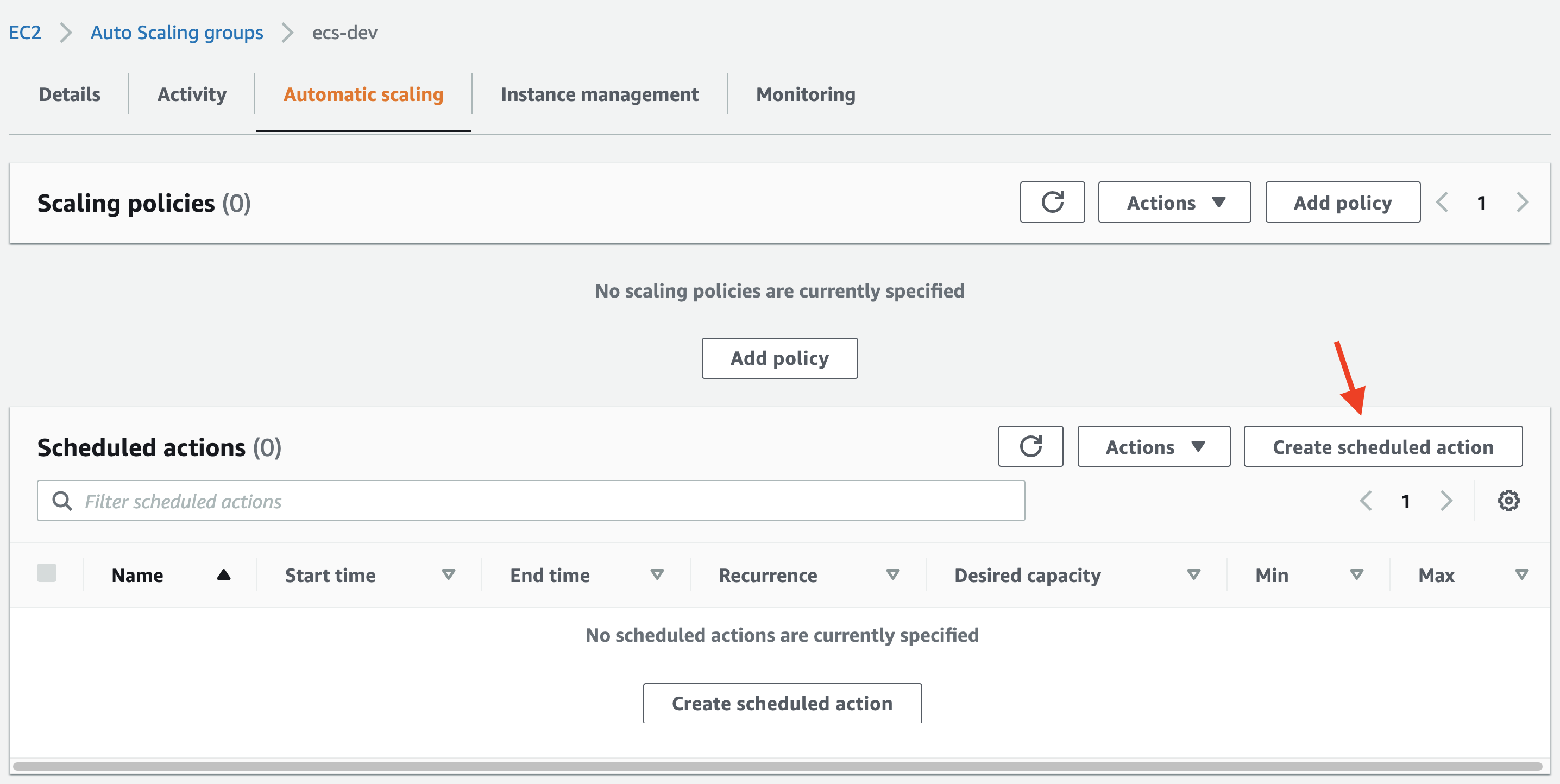

Under EC2 > Auto Scaling groups, select the group for the environment you would like to schedule.

Then click “Create scheduled action”

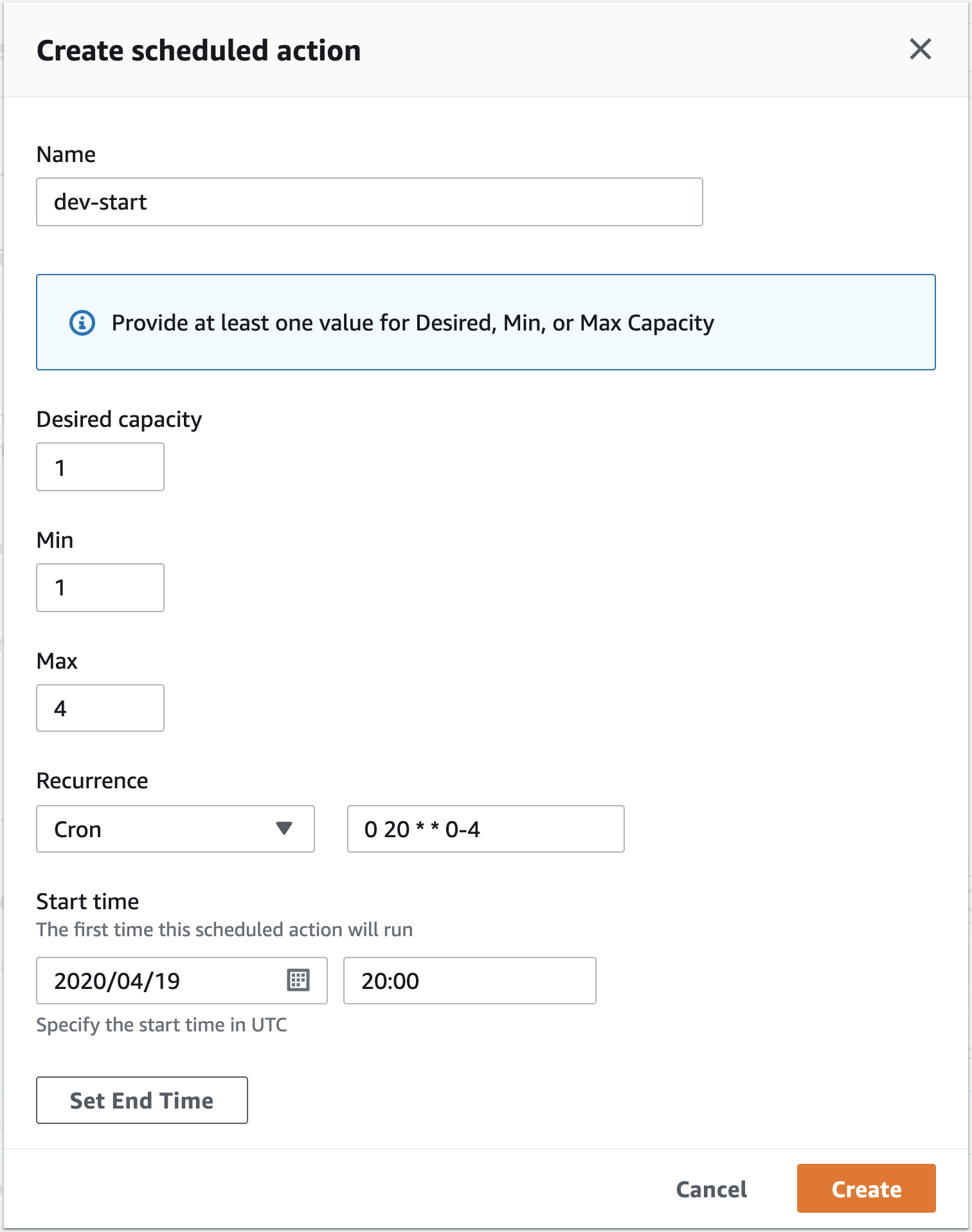

Enter the details as shown below for the start action.

Some caveats:

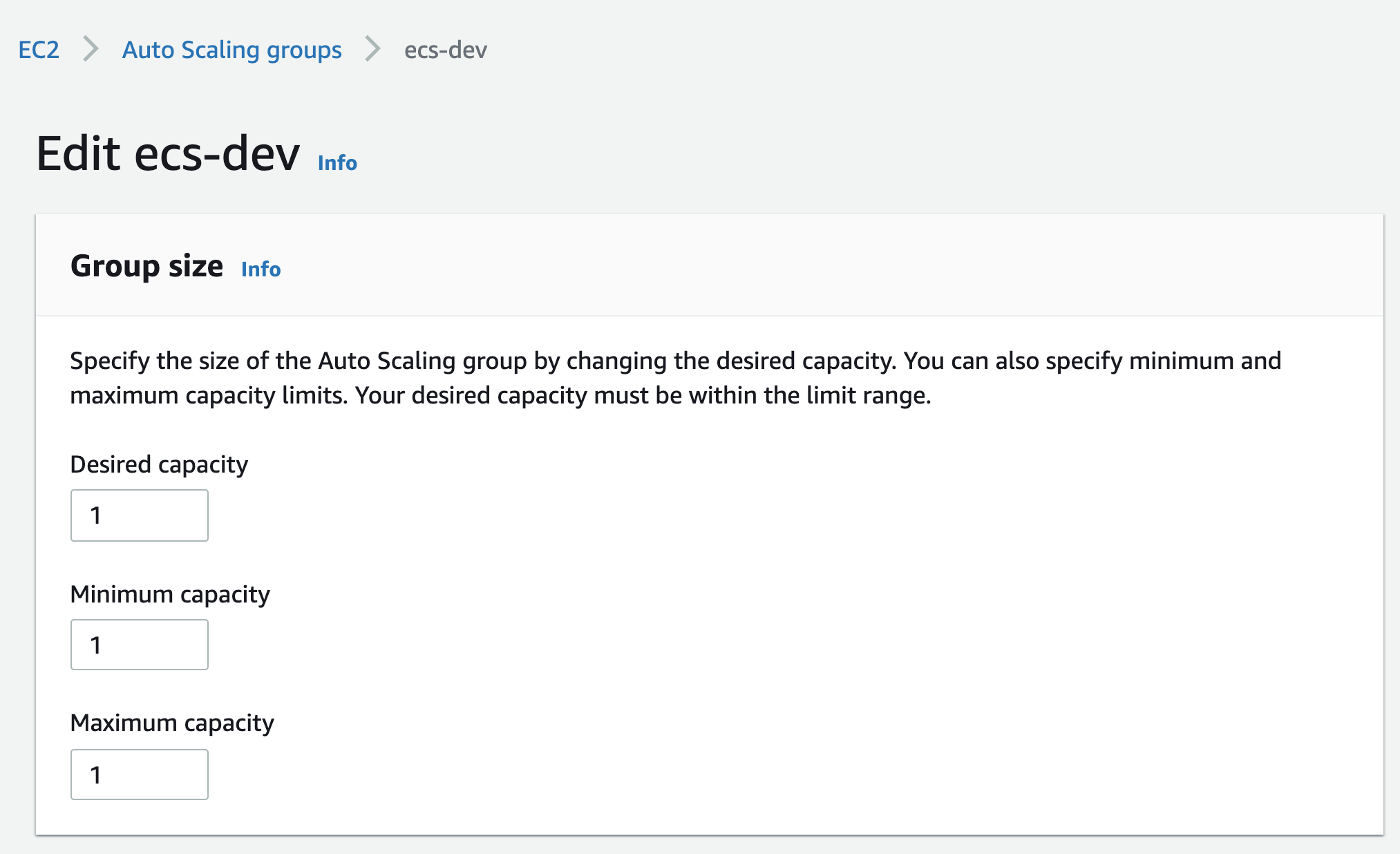

Set the Desired capacity, Min and Max to the same numbers you have today.

Set a Cron recurrence.

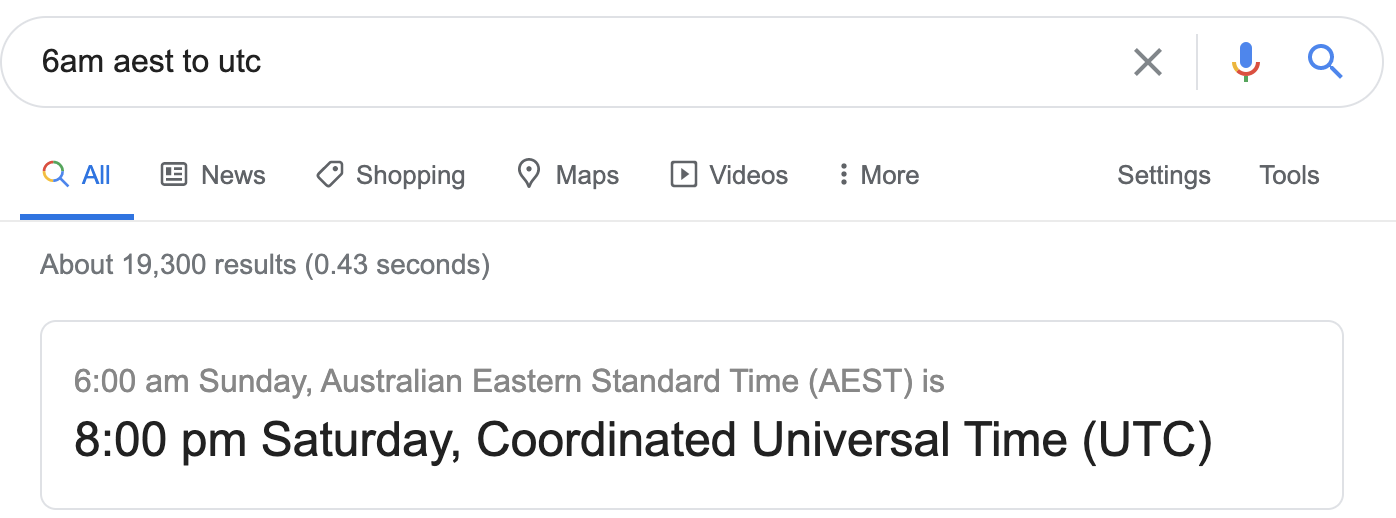

In our example, we would like to start the instances every weekday at 6am, Sydney time. Converting that to UTC, we got:

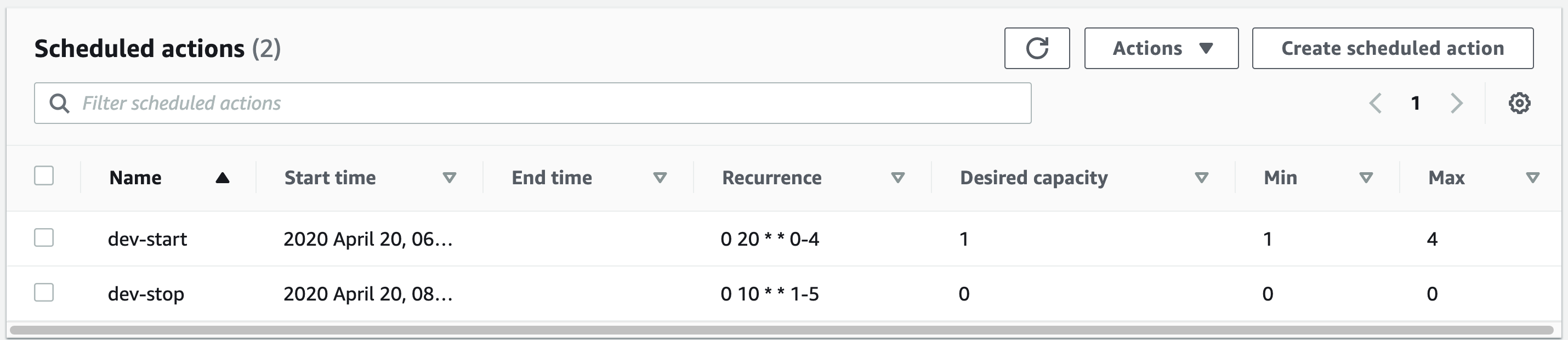

0 20 * * 0-4

This translates to 8:00pm UTC, every Sunday to Thursday.

It follows the crontab format, where:

[Minute] [Hour] [Day_of_Month] [Month_of_Year] [Day_of_Week]

Converting 6am AEST to UTC we get 8pm of the previous day.

Being in the previous day, we had to adjust the [Day_of_Week] field to run from Sunday to Thursday (0-4).
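The conversion above can be checked with a few lines of Python. This is only a sketch: the helper name `local_to_utc_cron` is made up for this example, and it assumes a fixed UTC+10 offset (AEST). During daylight saving Sydney moves to UTC+11, so a fixed UTC cron will fire one hour later in local time.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a fixed UTC+10 offset (AEST). Daylight saving is ignored here.
AEST = timezone(timedelta(hours=10))


def local_to_utc_cron(hour, minute, weekdays, tz=AEST):
    """Return a 5-field UTC cron string for a local wall-clock schedule.

    weekdays uses cron numbering: 0 = Sunday ... 6 = Saturday.
    """
    utc_days = set()
    utc_hour = utc_minute = 0
    for day in weekdays:
        # 1 January 2023 was a Sunday, so cron day N falls on January 1 + N.
        local = datetime(2023, 1, 1 + day, hour, minute, tzinfo=tz)
        utc = local.astimezone(timezone.utc)
        utc_hour, utc_minute = utc.hour, utc.minute
        # Python counts Monday as 0; cron counts Sunday as 0.
        utc_days.add((utc.weekday() + 1) % 7)

    days = sorted(utc_days)
    contiguous = days == list(range(days[0], days[-1] + 1))
    if contiguous and len(days) > 1:
        day_field = f"{days[0]}-{days[-1]}"
    else:
        day_field = ",".join(str(d) for d in days)
    return f"{utc_minute} {utc_hour} * * {day_field}"


# 6am AEST, Monday to Friday (cron days 1-5)
print(local_to_utc_cron(6, 0, range(1, 6)))  # 0 20 * * 0-4
```

The same helper applied to 8pm AEST on weekdays stays on the same UTC day, so the day-of-week field does not shift.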

Finally, set the start time for the first occurrence of the schedule.

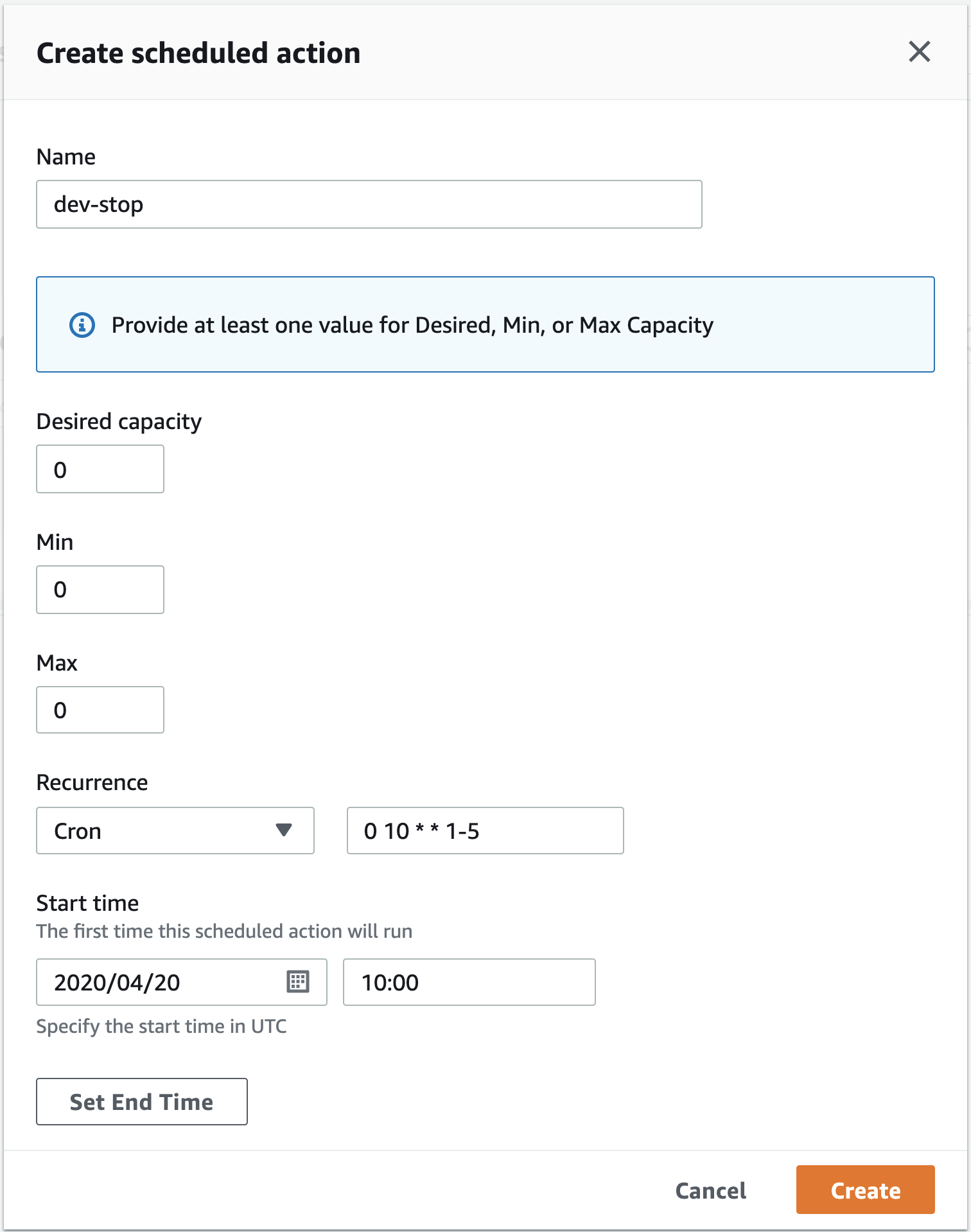

Now for the Stop Action:

Set the Desired capacity, Min and Max to 0.

Set the Cron recurrence to:

0 10 * * 1-5

That translates to Monday to Friday, 10:00am, UTC time.

This converts to every weekday, 8pm AEST (Sydney) time, which is what we want.

For more examples and documentation, please see AWS docs at https://docs.aws.amazon.com/autoscaling/ec2/userguide/schedule_time.html
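If you prefer scripting to the console, the two scheduled actions can also be created through the API. The sketch below uses boto3's `put_scheduled_update_group_action`. The group name, action names and capacity numbers are placeholders for this example, and the script assumes AWS credentials are already configured.

```python
def scheduled_action_requests(asg_name, min_size, max_size, desired):
    """Build the request parameters for the start and stop actions."""
    start = {
        "AutoScalingGroupName": asg_name,
        "ScheduledActionName": "start-weekdays",  # hypothetical name
        "Recurrence": "0 20 * * 0-4",  # 6am AEST, Monday to Friday
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
    }
    stop = {
        "AutoScalingGroupName": asg_name,
        "ScheduledActionName": "stop-weekdays",  # hypothetical name
        "Recurrence": "0 10 * * 1-5",  # 8pm AEST, Monday to Friday
        "MinSize": 0,
        "MaxSize": 0,
        "DesiredCapacity": 0,
    }
    return [start, stop]


def apply_scheduled_actions(requests):
    """Send the requests to AWS (needs boto3 and valid credentials)."""
    import boto3  # imported here so the builder above works without it

    client = boto3.client("autoscaling")
    for request in requests:
        client.put_scheduled_update_group_action(**request)


if __name__ == "__main__":
    # "my-nonprod-asg" and the sizes 1/4/2 are placeholders.
    for request in scheduled_action_requests("my-nonprod-asg", 1, 4, 2):
        print(request)
```

Keeping the request building separate from the API call makes it easy to review the parameters before anything is changed in the account.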

The final result should be something like:

To achieve the same using Terraform:

EC2s without an ASG (a.k.a. pets) and RDS instances

This section will cover the scheduled start/stop of EC2 and RDS instances. Both use CloudWatch Event Rules with SSM Automation.

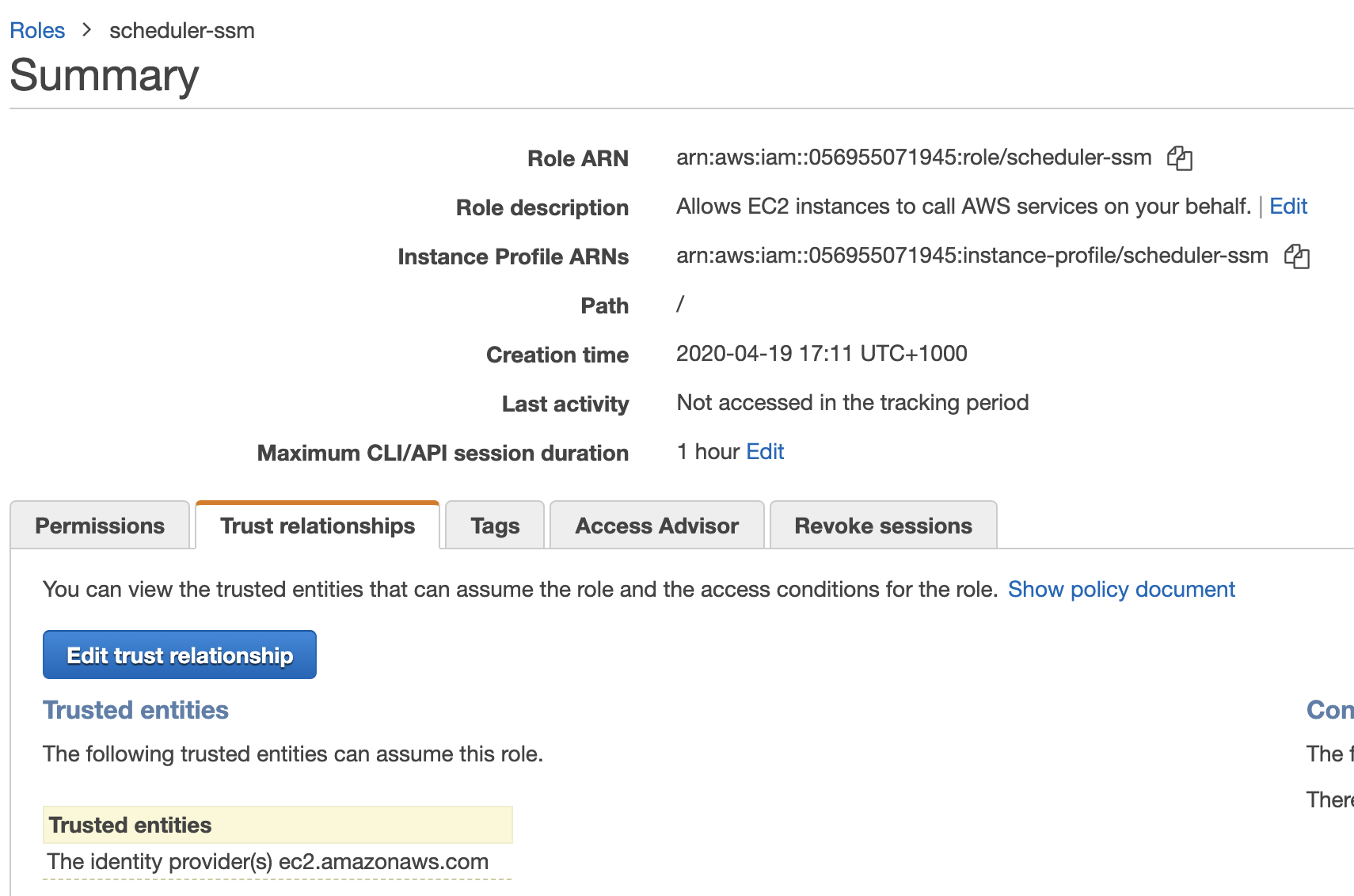

Preparing an IAM Role for SSM Automation

We need to create a role that allows SSM to run Automation documents.

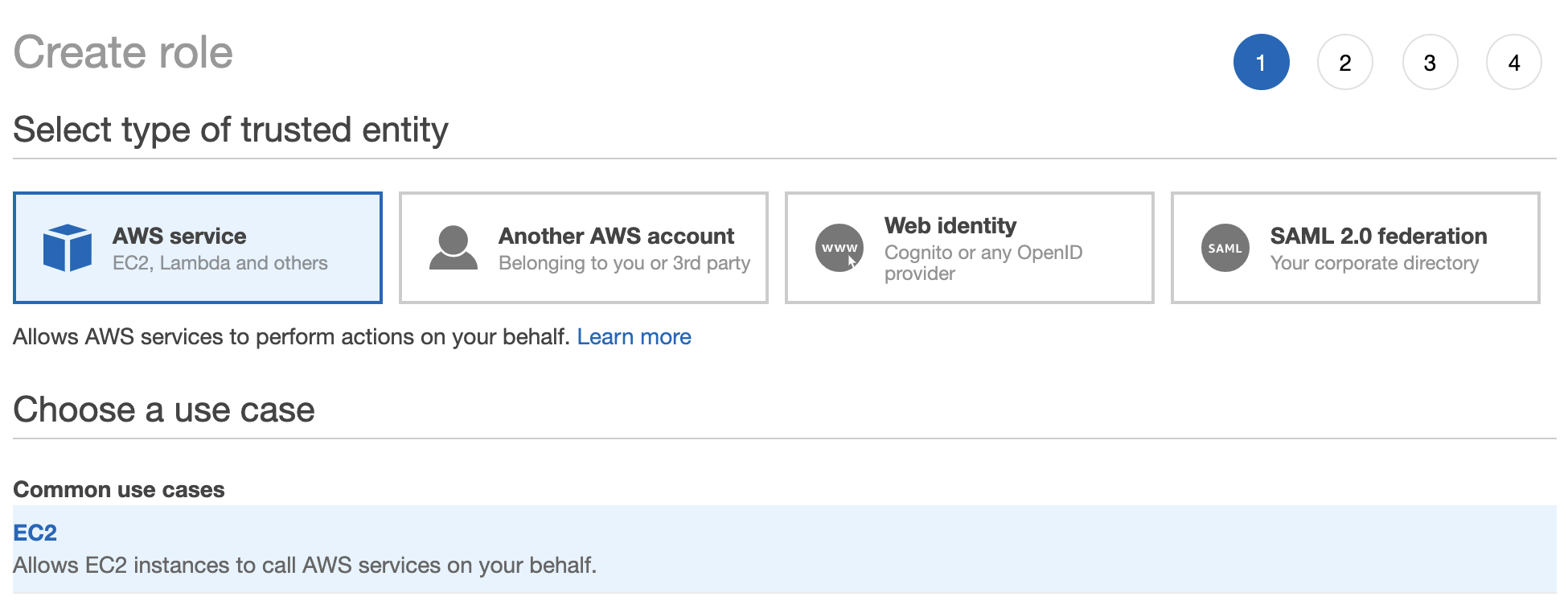

Go to IAM > Roles > Create Role

Select EC2 as the trusted entity (we will change it to SSM in a later step).

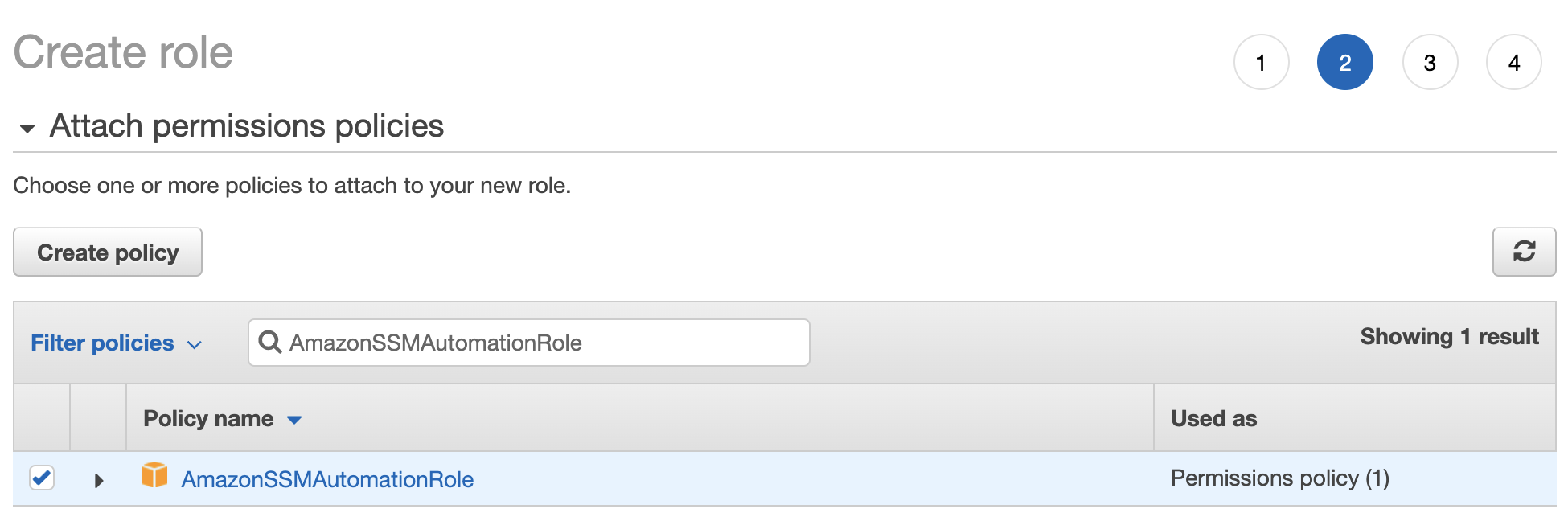

Add the AmazonSSMAutomationRole policy.

Click Next twice, name the role scheduler-ssm and save.

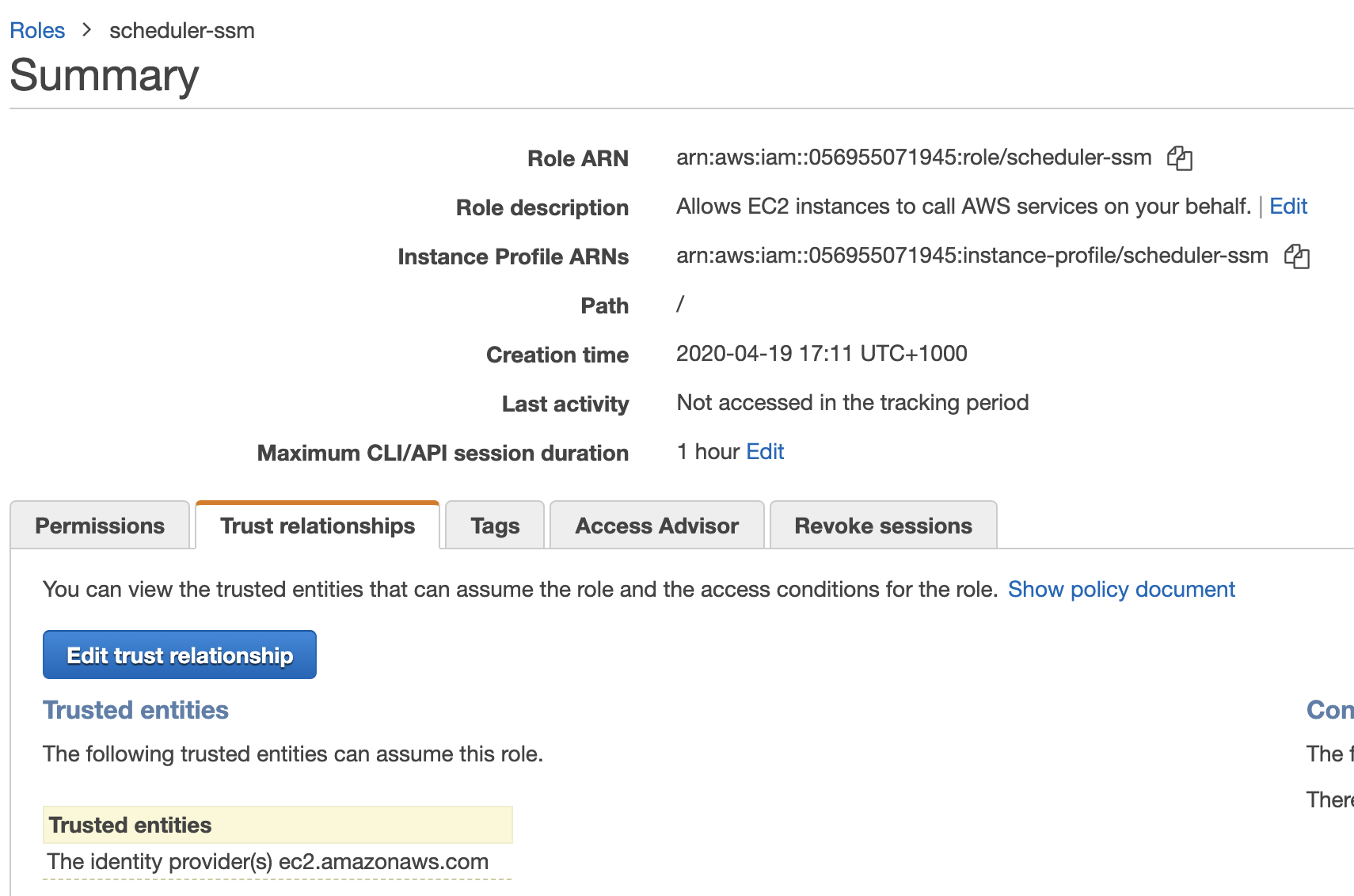

Find your role in the list, open it and click on the Trust Relationships tab.

Edit the trust relationship and change ec2.amazonaws.com to ssm.amazonaws.com.
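For reference, a trust relationship with SSM as the trusted service looks roughly like the document built below. The sketch prints the JSON so it can be compared with what the console shows. The `create_scheduler_role` helper is only an illustration of creating the same role with boto3 instead of the console, and it assumes AWS credentials are configured.

```python
import json

# Trust policy that lets the SSM service assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ssm.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_scheduler_role(role_name="scheduler-ssm"):
    """Create the role with the trust policy above and return its ARN."""
    import boto3  # imported lazily; needs valid AWS credentials

    iam = boto3.client("iam")
    response = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    return response["Role"]["Arn"]


if __name__ == "__main__":
    print(json.dumps(TRUST_POLICY, indent=2))
```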

Back on the Permissions tab, click Add Inline Policy and add the following policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "rds:StopDB*",
            "rds:StartDB*",
            "rds:DescribeDBInstances",
            "ec2:StartInstances",
            "ec2:StopInstances",
            "ec2:DescribeInstances"
          ],
          "Resource": [
            "*"
          ]
        }
      ]
    }

This will allow SSM Automation to start/stop instances.

Copy the ARN of this role as we will need it later.

Creating the CloudWatch Event Rules

We will use CloudWatch Events to schedule the stop and start of the instance(s).

Be aware that data stored in ephemeral (instance store) volumes is lost when an instance is stopped. Make sure all your data is stored in EBS volumes.

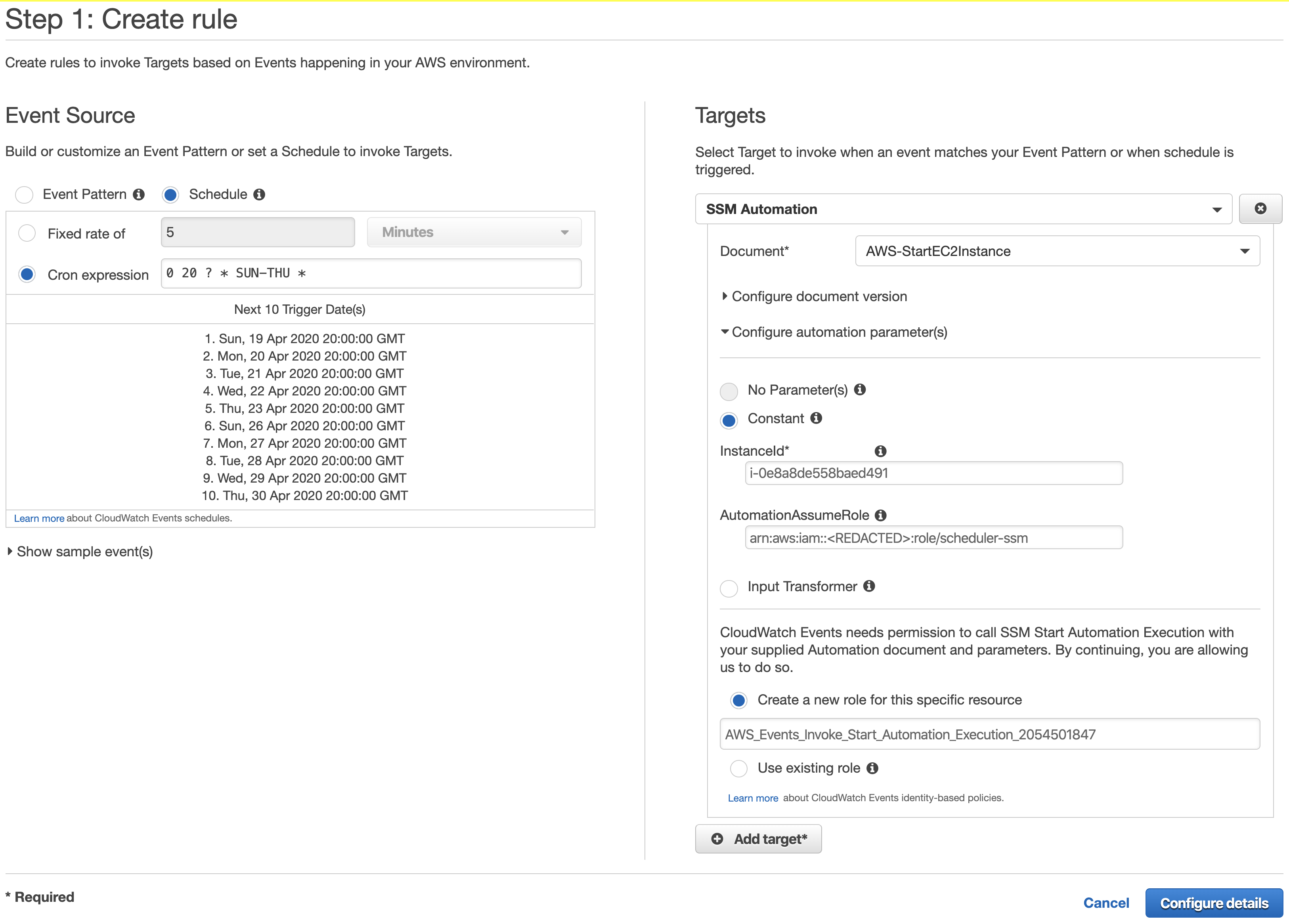

The Start Rule

Go to CloudWatch > Events > Rules > Create Rule

We are using a Cron expression similar to the previous one:

0 20 ? * SUN-THU *

That schedules the instance to start at 8pm UTC, Sunday to Thursday, which is the equivalent of 6am, Monday to Friday, Sydney (AEST) time.
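Note that CloudWatch Events cron expressions have six fields (minutes, hours, day of month, month, day of week, year), and one of the two day fields must be `?`. When a rule is created through the API instead of the console, the expression is wrapped in `cron(...)`. A minimal sketch with boto3's `put_rule` follows; the rule name is a placeholder and credentials are assumed to be configured.

```python
def schedule_rule_request(name, minute, hour, days):
    """Build put_rule parameters for a weekly schedule.

    days is a day-of-week expression such as "SUN-THU" or "MON-FRI".
    """
    return {
        "Name": name,
        # Fields: minutes hours day-of-month month day-of-week year.
        # Day-of-month is "?" because day-of-week is specified.
        "ScheduleExpression": f"cron({minute} {hour} ? * {days} *)",
        "State": "ENABLED",
    }


def create_rule(request):
    """Create or update the rule and return its ARN."""
    import boto3  # imported lazily; needs valid AWS credentials

    events = boto3.client("events")
    return events.put_rule(**request)["RuleArn"]


if __name__ == "__main__":
    # "start-nonprod" is a hypothetical rule name.
    print(schedule_rule_request("start-nonprod", 0, 20, "SUN-THU"))
```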

In the Targets section on the right side, select SSM Automation, and use the AWS-StartEC2Instance document for EC2 or AWS-StartRdsInstance for RDS instances.

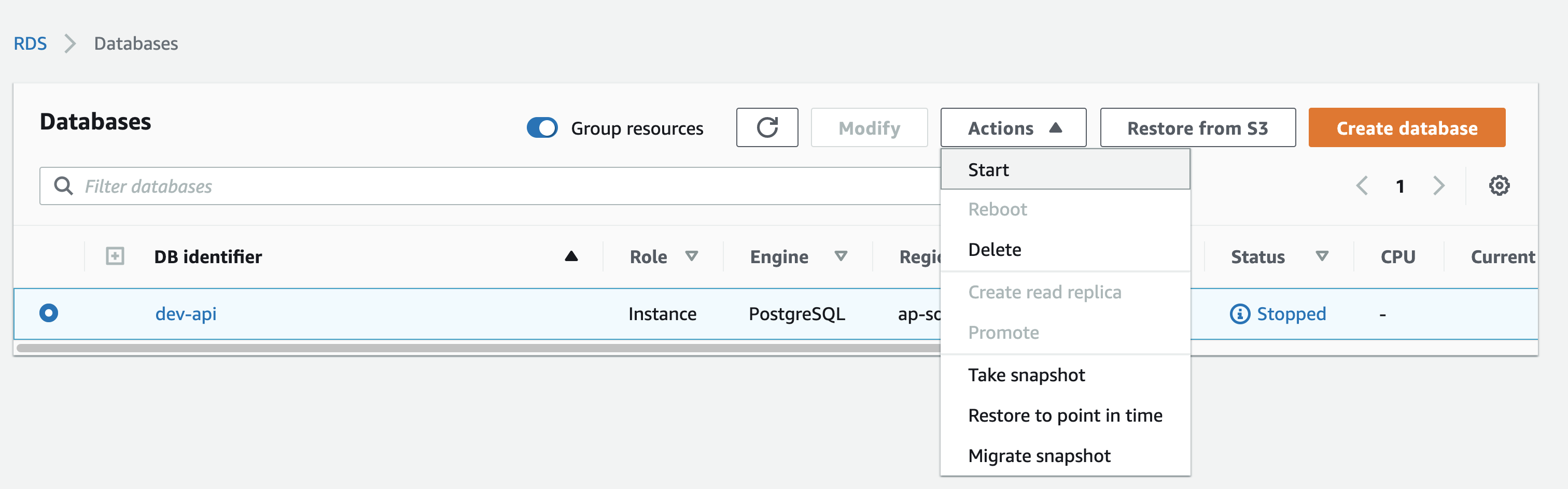

Enter the InstanceId for the instance you would like to start/stop. For RDS, it’s the “DB identifier” that you can find on the RDS console.

On the parameter AutomationAssumeRole, enter the ARN of the role created previously.

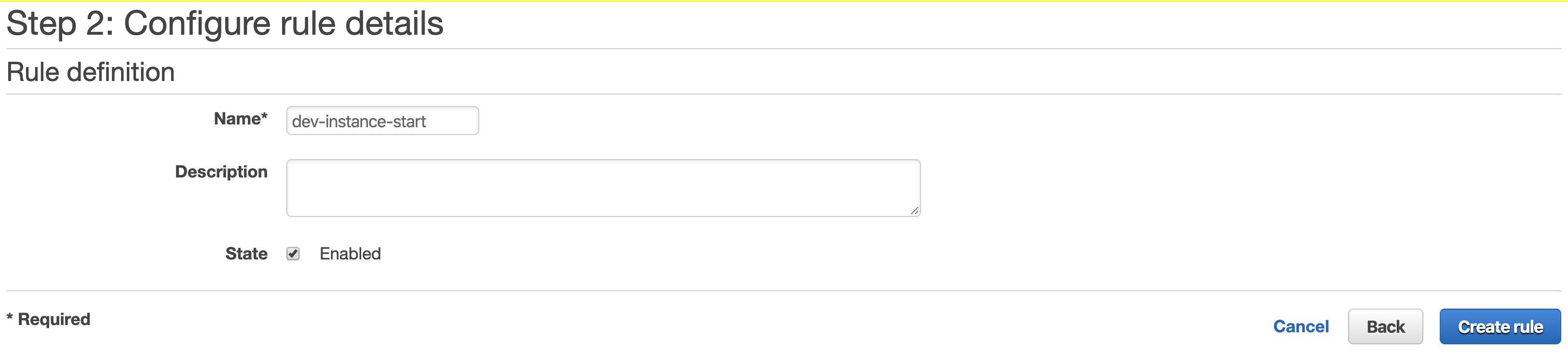

Click Configure details, enter a name for your rule and click Create Rule.
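Before waiting for the schedule to fire, it can be useful to run the Automation document once by hand to confirm that the role and parameters work. The sketch below does that with boto3's `start_automation_execution`. The instance ID and role ARN are placeholders, and the helper names are invented for this example.

```python
def automation_request(document_name, instance_id, assume_role_arn):
    """Build the parameters for a one-off Automation run.

    The SSM API expects every parameter value as a list of strings.
    """
    return {
        "DocumentName": document_name,
        "Parameters": {
            "InstanceId": [instance_id],
            "AutomationAssumeRole": [assume_role_arn],
        },
    }


def run_once(request):
    """Start the automation and return the execution ID."""
    import boto3  # imported lazily; needs valid AWS credentials

    ssm = boto3.client("ssm")
    return ssm.start_automation_execution(**request)["AutomationExecutionId"]


if __name__ == "__main__":
    # Placeholder instance ID and role ARN; replace with your own values.
    request = automation_request(
        "AWS-StartEC2Instance",
        "i-0123456789abcdef0",
        "arn:aws:iam::123456789012:role/scheduler-ssm",
    )
    print(request)
```

If the execution fails, the Automation page of the Systems Manager console usually shows which step or permission was the problem.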

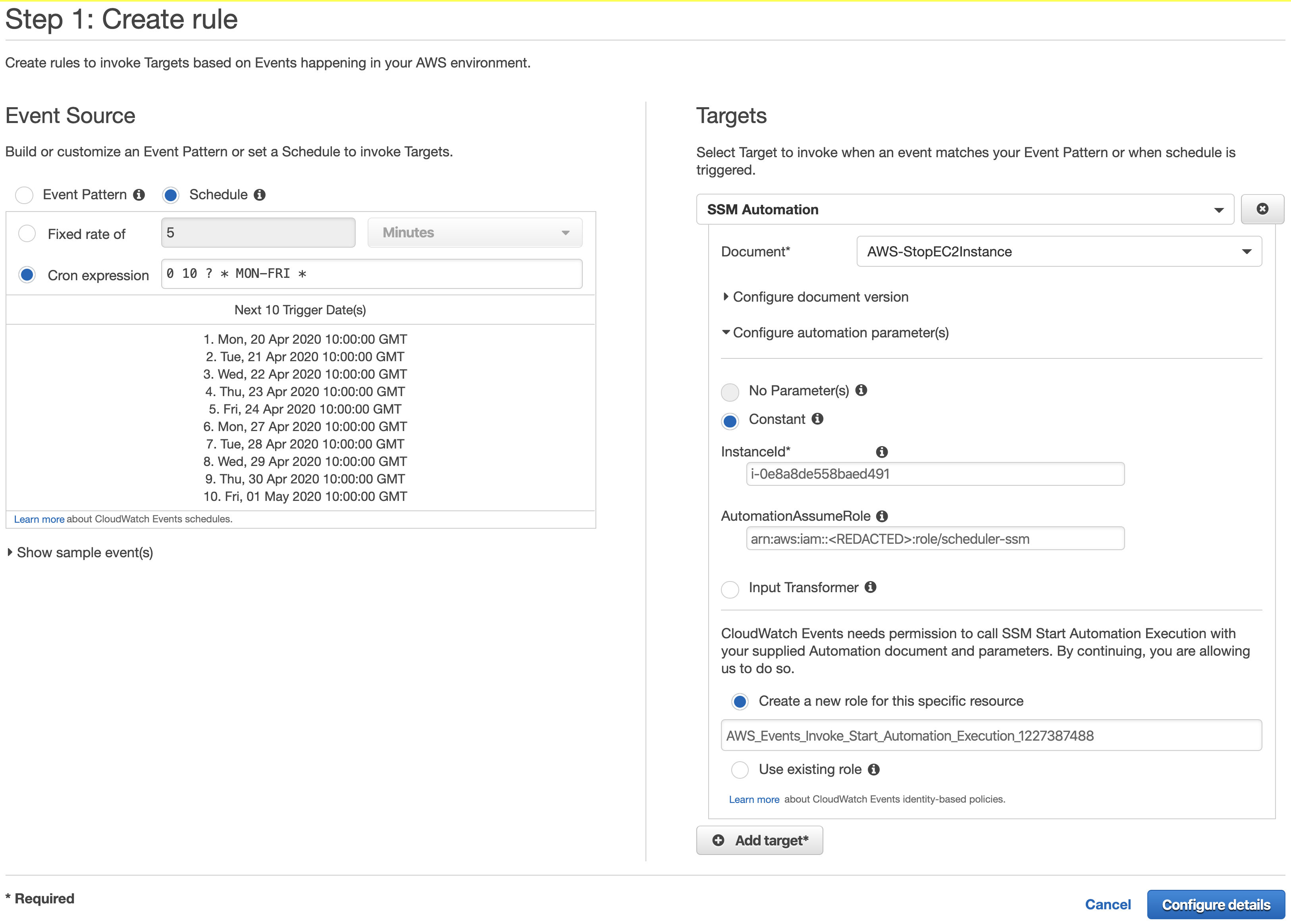

The Stop Rule

Go to CloudWatch > Events > Rules > Create Rule

The Cron expression used is for 10am UTC, Monday to Friday, which converts to 8pm, Monday to Friday, Sydney time.

0 10 ? * MON-FRI *

In the Targets section on the right side, select SSM Automation, and use the AWS-StopEC2Instance document for EC2 or AWS-StopRdsInstance for RDS instances.

Enter the InstanceId for the instance you would like to start/stop. For RDS, it’s the “DB identifier” that you can find on the RDS console.

On the parameter AutomationAssumeRole, enter the ARN of the role created previously.

Click Configure details, enter a name for your rule and click Create Rule.

You might need to repeat the steps above multiple times if you have multiple EC2 and RDS instances.

Using a Terraform Module

To avoid having to do the process above manually for every customer, we automated it internally and released it as an open-source Terraform module.

The module only automates the RDS scheduling, as we usually don’t create standalone EC2 instances for our customers, but it can be easily adapted by changing the SSM Automation Document it uses.

Ok, but what if someone needs the environment up during the weekend?

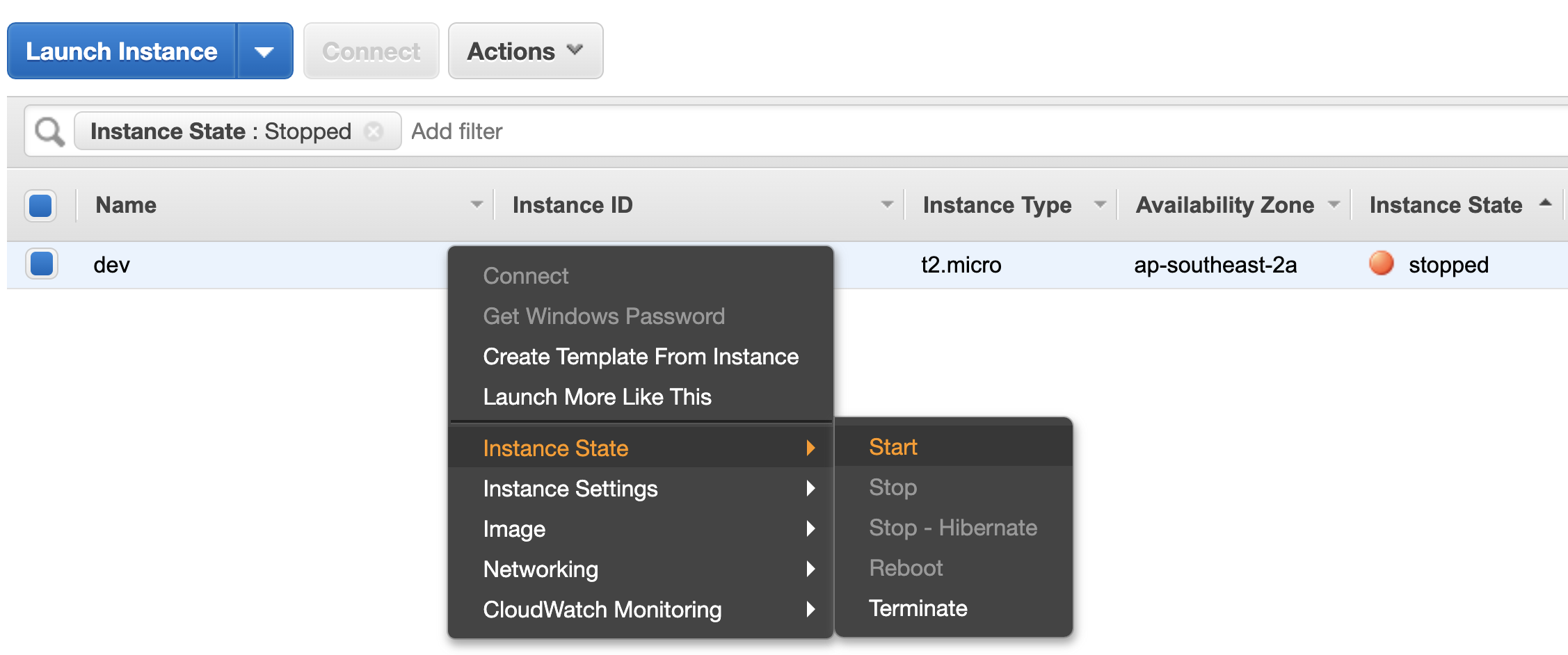

Simply log in to the AWS Console and start the instances.

This works for RDS instances, Auto Scaling groups and EC2 instances.

Manually starting an instance won’t affect the schedule. The next time the scheduler tries to start the instance, it will detect that it is already running and do nothing.
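The same manual start can be scripted for anyone who prefers not to use the console. The sketch below collects the boto3 calls involved: `start_instances` for EC2, `start_db_instance` for RDS and `update_auto_scaling_group` for ASGs. All identifiers and capacity numbers are placeholders, and the helper names are invented for this example.

```python
def manual_start_calls(ec2_ids=(), rds_ids=(), asgs=()):
    """List the API calls needed to bring an environment up by hand.

    asgs is an iterable of (name, min_size, max_size, desired) tuples.
    Each returned item is (service, method, keyword arguments).
    """
    calls = []
    if ec2_ids:
        calls.append(("ec2", "start_instances", {"InstanceIds": list(ec2_ids)}))
    for db_id in rds_ids:
        calls.append(("rds", "start_db_instance", {"DBInstanceIdentifier": db_id}))
    for name, min_size, max_size, desired in asgs:
        calls.append((
            "autoscaling",
            "update_auto_scaling_group",
            {
                "AutoScalingGroupName": name,
                "MinSize": min_size,
                "MaxSize": max_size,
                "DesiredCapacity": desired,
            },
        ))
    return calls


def run(calls):
    """Execute the calls (needs boto3 and valid AWS credentials)."""
    import boto3

    for service, method, kwargs in calls:
        getattr(boto3.client(service), method)(**kwargs)


if __name__ == "__main__":
    # Placeholder identifiers; replace with your own resources.
    plan = manual_start_calls(
        ec2_ids=["i-0123456789abcdef0"],
        rds_ids=["nonprod-db"],
        asgs=[("my-nonprod-asg", 1, 4, 2)],
    )
    for call in plan:
        print(call)
```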

Conclusion

Recently there’s been a move to a more serverless architecture, where you pay only for the number of requests, computation and storage used for serving customers. But this architecture brings some challenges, such as re-platforming apps, switching technologies and sometimes having to rewrite code.

Until then, we hope to have introduced a way to reduce costs without the obvious impact of re-platforming. This is one of the techniques we use with our customers while they are on their journey to serverless. For more, stay tuned to this blog.

At DNX Solutions, we work to bring a better cloud and application experience for digital-native companies in Australia. Our current focus areas are AWS, Well-Architected Solutions, Containers, ECS, Kubernetes, Continuous Integration/Continuous Delivery and Service Mesh. We are always hiring cloud engineers for our Sydney office, focusing on cloud-native concepts. Check our open-source projects at https://github.com/DNXLabs and follow us on Twitter, Linkedin or Facebook.
