The missing feature of CloudWatch Logs.

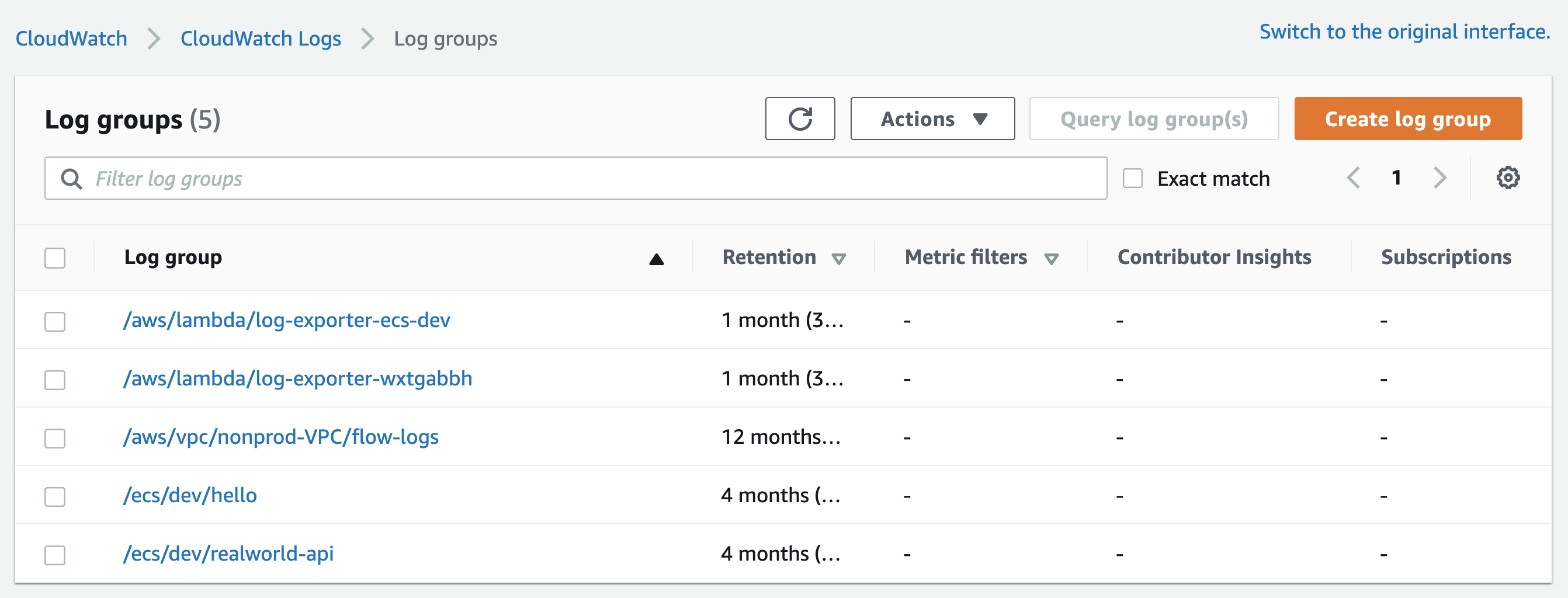

Your CloudWatch Log Groups could look something like this:

Log groups with Retention

As you might guess, after the retention time, logs are deleted.

And that’s a good approach for keeping the costs under control, but sometimes regulation mandates that logs are stored longer than this period.

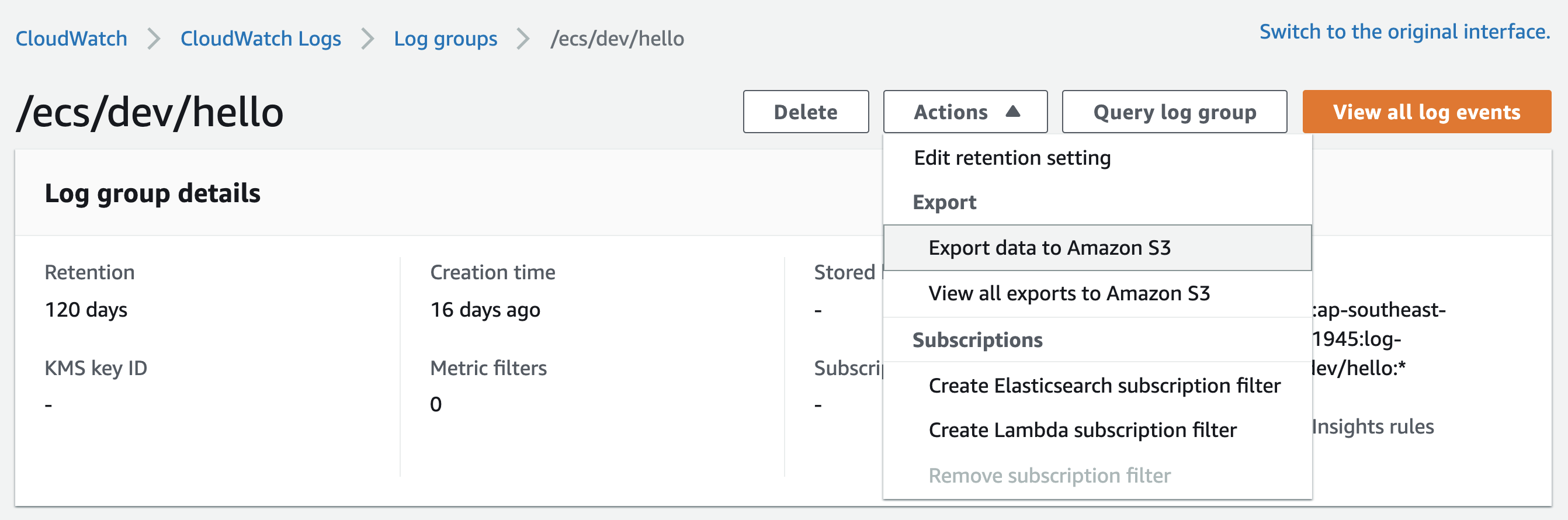

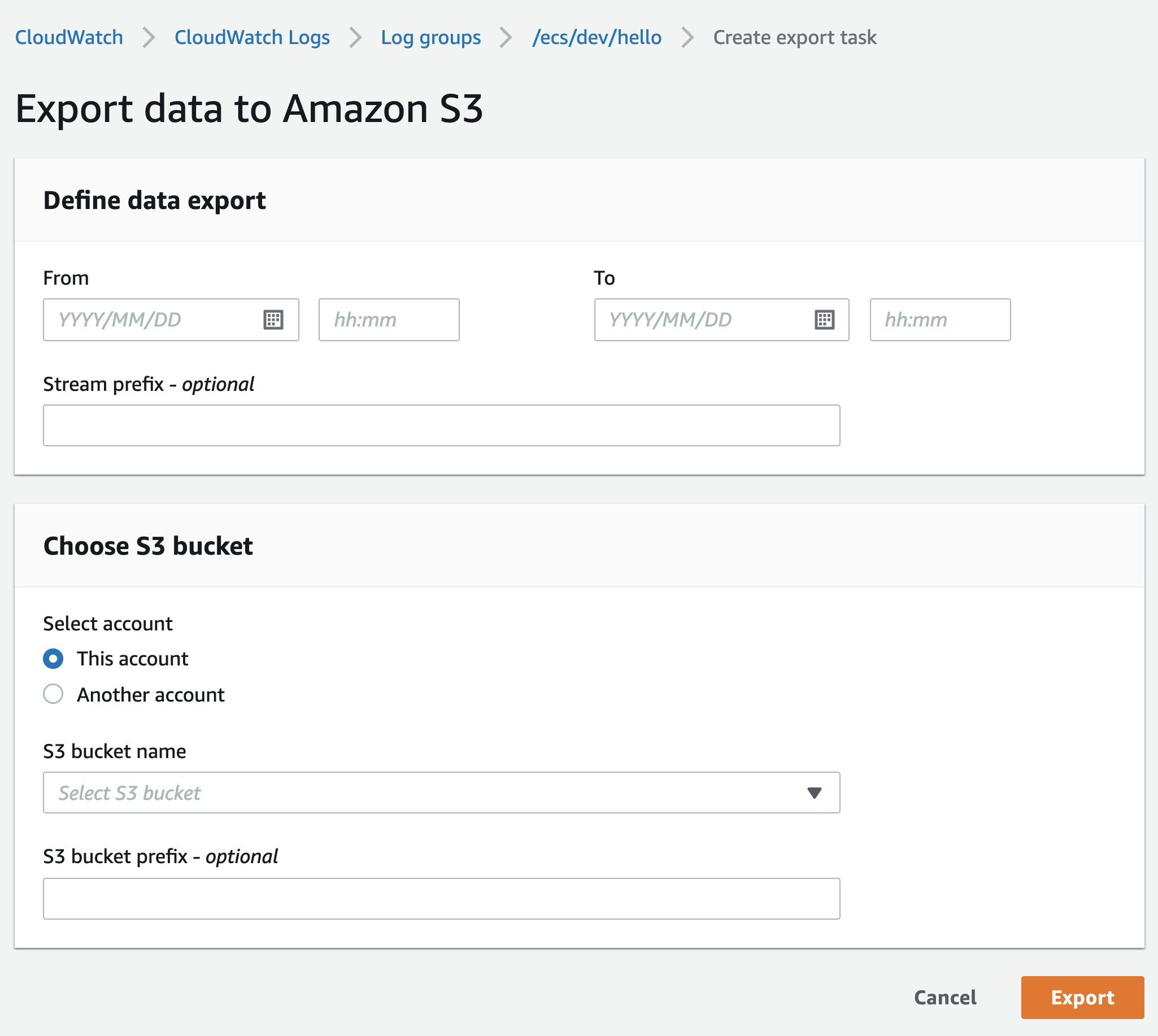

Luckily, AWS allows you to export logs to S3.

But this is a manual operation, there’s no option to export logs periodically and automatically.

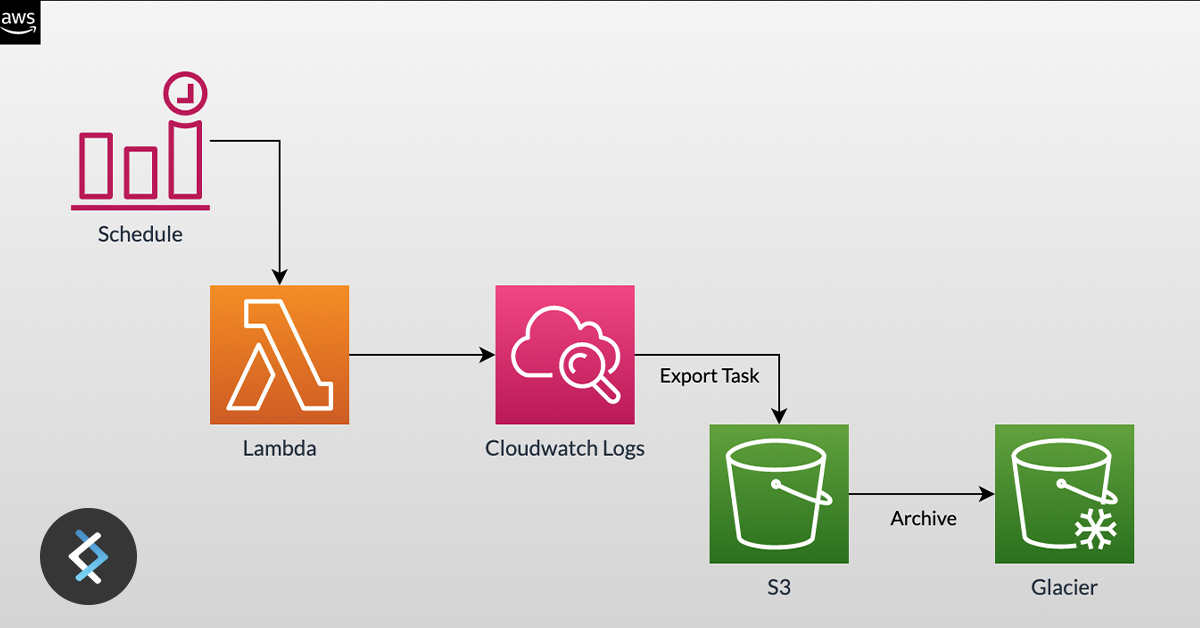

So what we want to achieve here is:

- Every day, export logs of Log Groups to an S3 bucket

- Only export logs that were not exported before

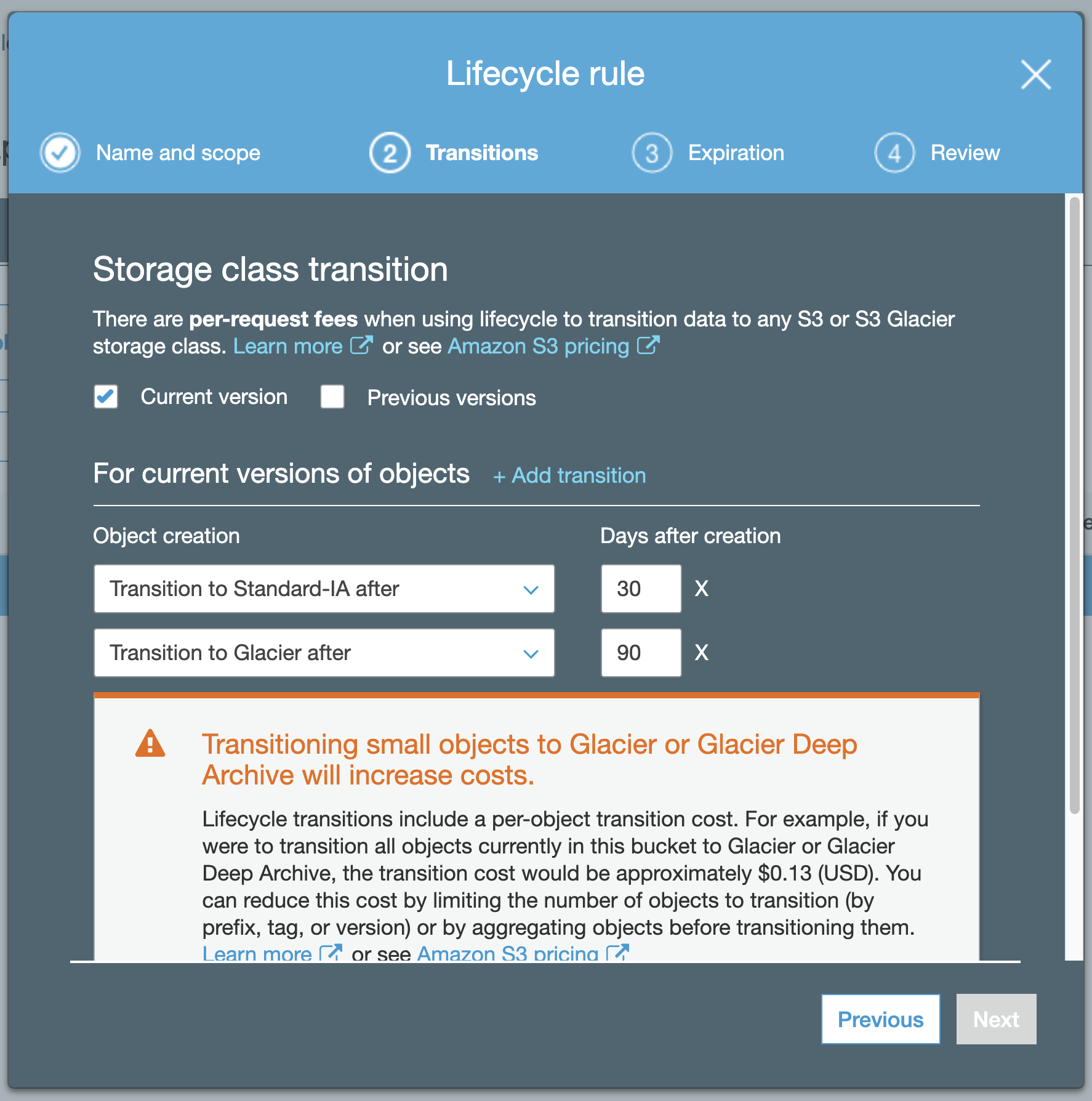

- Archive the logs with S3 Glacier for even lower costs

Exporting logs of Log Groups to an S3 bucket

Let’s get to the reason you’re here. Here’s the lambda function:

https://gist.github.com/adenot/ab7b3ce3c869d04044ac1c17f83bf70b

At a glance:

- Env variable S3_BUCKET needs to be set. It’s the bucket to export the logs to.

- Creates a CloudWatch Logs Export Task

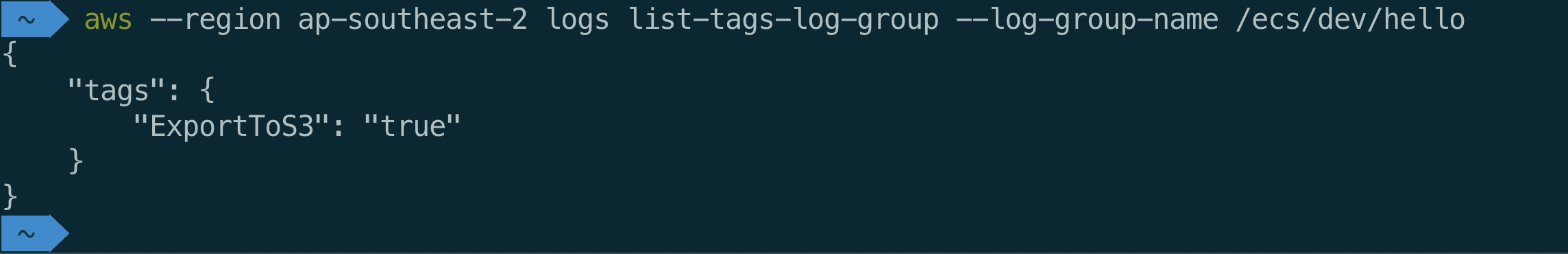

- It only exports logs from Log Groups that have a tag ExportToS3=true

- It will use the log group name as the prefix folder when exporting

- Saves a checkpoint in SSM so it exports from that timestamp next time

- Only exports if 24 hours have passed from the last checkpoint
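To make the checkpoint logic in the list above more concrete, here’s a minimal Python sketch of how the export window could be computed. It is not the code from the gist: build_export_request, to_millis and EXPORT_INTERVAL are hypothetical names, and both exporting from timestamp 0 when there is no checkpoint yet and stripping the slashes from the prefix are assumptions. The keys of the returned dict match the parameters of the boto3 create_export_task call.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Minimum time between two exports of the same log group (24 hours, as above).
EXPORT_INTERVAL = timedelta(hours=24)


def to_millis(moment: datetime) -> int:
    """Convert a timezone-aware datetime to milliseconds since the epoch."""
    return int(moment.timestamp() * 1000)


def build_export_request(
    log_group_name: str,
    bucket: str,
    last_checkpoint_ms: Optional[int],
    now: datetime,
) -> Optional[dict]:
    """Return kwargs for logs.create_export_task, or None if it is too early."""
    now_ms = to_millis(now)
    interval_ms = int(EXPORT_INTERVAL.total_seconds() * 1000)

    # Skip log groups that were exported less than 24 hours ago.
    if last_checkpoint_ms is not None and now_ms - last_checkpoint_ms < interval_ms:
        return None

    # Assumption: with no checkpoint yet, export everything from the epoch.
    from_ms = last_checkpoint_ms if last_checkpoint_ms is not None else 0

    return {
        "logGroupName": log_group_name,
        "fromTime": from_ms,  # start of the window, in milliseconds
        "to": now_ms,  # end of the window, saved as the next checkpoint
        "destination": bucket,
        # Assumption: the log group name without surrounding slashes
        # is used as the folder prefix inside the bucket.
        "destinationPrefix": log_group_name.strip("/"),
    }


if __name__ == "__main__":
    current = datetime.now(timezone.utc)
    yesterday = to_millis(current - timedelta(hours=25))
    print(build_export_request("/ecs/dev/hello", "my-log-bucket", yesterday, current))
```

With a boto3 CloudWatch Logs client, the result could be passed along as logs.create_export_task(**request), and request["to"] would then be the value to store in SSM as the next checkpoint.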

One caveat I found was that AWS only allows one active Export Task (running or pending) per account at a time. This means that when the Lambda function tried to export multiple log groups at once, I got a LimitExceededException error.

To mitigate that, I added a time.sleep(5), which seems to be enough for one Export Task to finish before the next one starts.

But there might be cases where the Export Task takes more than 5 seconds, or you have too many log groups and the Lambda execution time is not enough to export all of them.

In this case I recommend running the lambda every 4 hours. It will only export log groups that haven’t been exported for 24 hours, so it’s safe to do without causing overlapping logs to be exported.
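If a fixed sleep turns out to be too fragile, one alternative is to poll the task status until it finishes. The sketch below is not what the gist does; it is a hedged variation. wait_for_export_task and its parameters are hypothetical names, while describe_export_tasks and the status codes are the ones exposed by the CloudWatch Logs API. The client is passed in, so it can be a boto3 logs client or a stand-in for testing.

```python
import time

# Status codes after which an export task will no longer change.
TERMINAL_STATES = {"COMPLETED", "CANCELLED", "FAILED"}


def wait_for_export_task(
    logs_client,
    task_id: str,
    poll_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
    sleep=time.sleep,
) -> str:
    """Poll describe_export_tasks until the task ends; return its final status code.

    Raises TimeoutError if the task is still active after timeout_seconds.
    """
    waited = 0.0
    while True:
        response = logs_client.describe_export_tasks(taskId=task_id)
        code = response["exportTasks"][0]["status"]["code"]
        if code in TERMINAL_STATES:
            return code
        if waited >= timeout_seconds:
            raise TimeoutError(f"export task {task_id} still {code} after {waited}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

The timeout has to stay below the Lambda function’s own timeout, so running the function every few hours is still a sensible safety net for log groups that didn’t get their turn.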

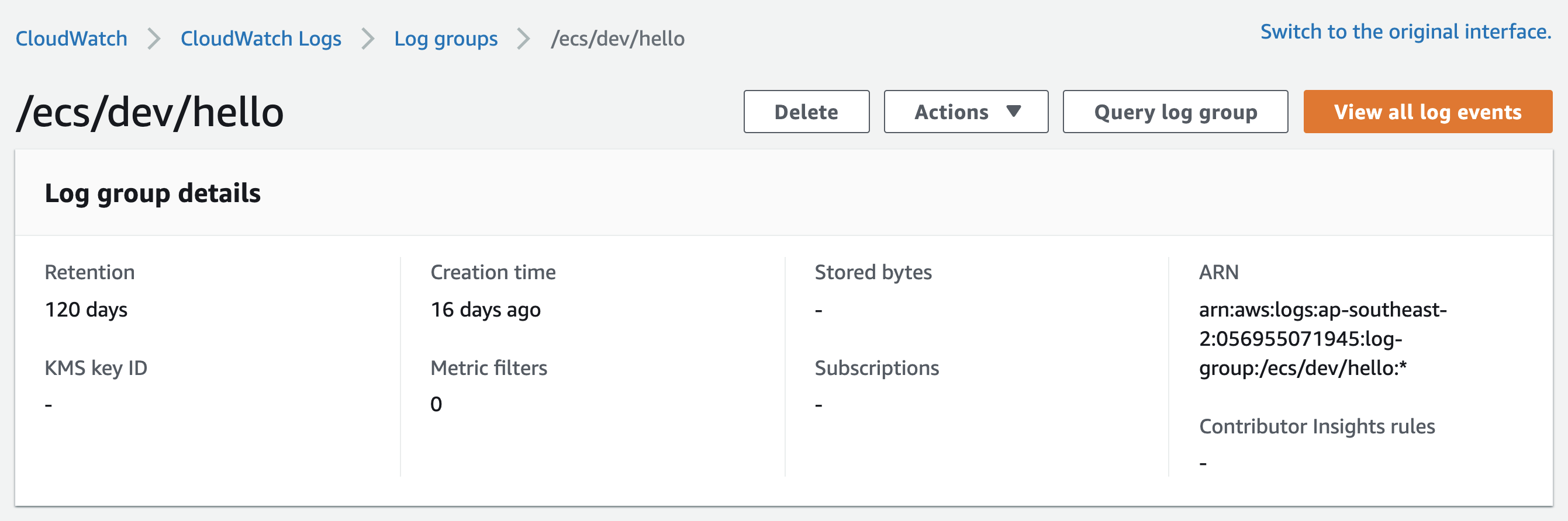

But wait a minute. CloudWatch Log Groups don’t have tags!

Where are the tags?

That’s right. The AWS Console shows no option to list or edit tags.

But they are there, I promise!

You can set a tag to a log group using the command:

aws --region ap-southeast-2 logs tag-log-group --log-group-name /ecs/dev/hello --tags ExportToS3=true
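On the Python side, reading those tags back could look roughly like the sketch below. It assumes a boto3 CloudWatch Logs client is passed in and uses its describe_log_groups paginator and list_tags_log_group call. has_export_tag and log_groups_to_export are hypothetical helper names, and matching the exact string "true" is an assumption about how strict the check should be.

```python
def has_export_tag(tags: dict) -> bool:
    """True when the log group carries the tag ExportToS3=true."""
    return tags.get("ExportToS3") == "true"


def log_groups_to_export(logs_client) -> list:
    """Return the names of all log groups tagged ExportToS3=true."""
    names = []
    # The paginator walks through every page of log groups in the region.
    paginator = logs_client.get_paginator("describe_log_groups")
    for page in paginator.paginate():
        for group in page["logGroups"]:
            name = group["logGroupName"]
            # Tags are not part of describe_log_groups, so fetch them per group.
            response = logs_client.list_tags_log_group(logGroupName=name)
            if has_export_tag(response.get("tags", {})):
                names.append(name)
    return names
```

Called as log_groups_to_export(boto3.client("logs")), this would return /ecs/dev/hello once the command above has been run against it.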

How about IAM policies?

To avoid this blog running for more than 10 pages (or should I say, screens), here’s a link to the Terraform file that builds the policies for the Lambda function:

S3 Bucket and moving to Glacier

Check the following docs from AWS: https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/S3ExportTasksConsole.html

Alternatively (another shameless plug), you can look at our Terraform module that builds the S3 bucket and sets the right policies (in a multi-account environment): https://github.com/DNXLabs/terraform-aws-audit-buckets

Our final policy for the bucket looks like this:

https://gist.github.com/adenot/2f1e1c929c2ed6d6e79c438d22d46dff

Replace <NAME OF THIS BUCKET> with the name of the bucket you created and <EXTERNAL ACCOUNT> with any external AWS accounts that you wish to allow to export logs to it.

And for archiving it to Glacier, the Lifecycle rule is:
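As a rough illustration of such a rule in Python, here is a sketch that builds a lifecycle configuration in the shape expected by the boto3 put_bucket_lifecycle_configuration call. The 90-day delay, the rule ID and the helper names are assumptions chosen for the example, not values from the rule we actually use. The empty prefix makes the rule apply to every object in the bucket.

```python
def glacier_lifecycle_configuration(days_before_archive: int = 90) -> dict:
    """Build a lifecycle configuration that moves all objects to Glacier."""
    if days_before_archive < 0:
        raise ValueError("days_before_archive must not be negative")
    return {
        "Rules": [
            {
                "ID": "archive-exported-logs",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: whole bucket
                "Transitions": [
                    {"Days": days_before_archive, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


def apply_glacier_lifecycle(bucket: str, s3_client=None, days_before_archive: int = 90) -> None:
    """Apply the rule to a bucket; creates a boto3 S3 client if none is given."""
    if s3_client is None:
        import boto3  # imported lazily so the builder above works without it

        s3_client = boto3.client("s3")
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=glacier_lifecycle_configuration(days_before_archive),
    )
```

Pick the number of days based on how long you still expect to query the exported logs directly from S3 before they move to Glacier.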

Conclusion

We hope this article helped you set up a more compliant, automated and cost-efficient infrastructure.

If you are interested in infrastructure-as-code, please check out our plethora of Terraform modules, Docker images and CLIs that help our clients to automate their infrastructure at https://modules.dnx.one
